diff --git a/.env.example b/.env.example

index b8ccaf86..a7bd6b36 100644

--- a/.env.example

+++ b/.env.example

@@ -16,6 +16,8 @@ GEMINI_API_KEY=""

## Hugging Face

HUGGINGFACE_TOKEN=""

+GROQ_API_KEY=""

+

## Perplexity AI

PPLX_API_KEY=""
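
The new `GROQ_API_KEY` entry above is only a placeholder, so whatever consumes it still has to look the value up at runtime. A minimal sketch of that lookup, assuming the plain `KEY="value"` layout used in `.env.example`; `load_env_file` and `get_api_key` are hypothetical helper names for illustration, not functions from the swarms codebase:

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Parse simple KEY="value" lines, skipping blanks and '#' comment lines."""
    values: dict[str, str] = {}
    env_path = Path(path)
    if not env_path.exists():
        return values
    for raw_line in env_path.read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes so GROQ_API_KEY="abc" yields abc
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def get_api_key(name: str, env_file: str = ".env") -> str:
    """Prefer the process environment, then fall back to the env file."""
    value = os.environ.get(name) or load_env_file(env_file).get(name, "")
    if not value:
        raise RuntimeError(f"{name} is not set; export it or add it to {env_file}")
    return value
```

Raising on an empty value makes a forgotten placeholder such as `GROQ_API_KEY=""` fail at startup rather than at the first API call.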

diff --git a/.github/workflows/lint.yml b/.github/workflows/lint.yml

index f2295d07..2d09ad85 100644

--- a/.github/workflows/lint.yml

+++ b/.github/workflows/lint.yml

@@ -1,33 +1,33 @@

---

name: Lint

on: [push, pull_request] # yamllint disable-line rule:truthy

+

jobs:

-  yaml-lint:

+  lint:

     runs-on: ubuntu-latest

     steps:

       - uses: actions/checkout@v4

-      - uses: actions/setup-python@v5

-      - run: pip install yamllint

-      - run: yamllint .

-  flake8-lint:

-    runs-on: ubuntu-latest

-    steps:

-      - uses: actions/checkout@v4

-      - uses: actions/setup-python@v5

-      - run: pip install flake8

-      - run: flake8 .

-  ruff-lint:

-    runs-on: ubuntu-latest

-    steps:

-      - uses: actions/checkout@v4

-      - uses: actions/setup-python@v5

-      - run: pip install ruff

-      - run: ruff format .

-      - run: ruff check --fix .

-  pylint-lint:

-    runs-on: ubuntu-latest

-    steps:

-      - uses: actions/checkout@v4

-      - uses: actions/setup-python@v5

-      - run: pip install pylint

-      - run: pylint swarms --recursive=y

+

+      - name: Set up Python

+        uses: actions/setup-python@v5

+        with:

+          python-version: '3.10'

+

+      - name: Cache pip dependencies

+        uses: actions/cache@v3

+        with:

+          path: ~/.cache/pip

+          key: ${{ runner.os }}-pip-${{ hashFiles('**/pyproject.toml') }}

+          restore-keys: |

+            ${{ runner.os }}-pip-

+

+      - name: Install dependencies

+        run: |

+          python -m pip install --upgrade pip

+          pip install black==24.2.0 ruff==0.2.1

+

+      - name: Check Black formatting

+        run: black . --check --diff

+

+      - name: Run Ruff linting

+        run: ruff check .

diff --git a/.github/workflows/semgrep.yml b/.github/workflows/semgrep.yml

deleted file mode 100644

index 4a122c7b..00000000

--- a/.github/workflows/semgrep.yml

+++ /dev/null

@@ -1,49 +0,0 @@

-# This workflow uses actions that are not certified by GitHub.

-# They are provided by a third-party and are governed by

-# separate terms of service, privacy policy, and support

-# documentation.

-

-# This workflow file requires a free account on Semgrep.dev to

-# manage rules, file ignores, notifications, and more.

-#

-# See https://semgrep.dev/docs

-

-name: Semgrep

-

-on:

-  push:

-    branches: [ "master" ]

-  pull_request:

-    # The branches below must be a subset of the branches above

-    branches: [ "master" ]

-  schedule:

-    - cron: '19 7 * * 3'

-

-permissions:

-  contents: read

-

-jobs:

-  semgrep:

-    permissions:

-      contents: read # for actions/checkout to fetch code

-      security-events: write # for github/codeql-action/upload-sarif to upload SARIF results

-      actions: read # only required for a private repository by github/codeql-action/upload-sarif to get the Action run status

-    name: Scan

-    runs-on: ubuntu-latest

-    steps:

-      # Checkout project source

-      - uses: actions/checkout@v4

-

-      # Scan code using project's configuration on https://semgrep.dev/manage

-      - uses: returntocorp/semgrep-action@713efdd345f3035192eaa63f56867b88e63e4e5d

-        with:

-          publishToken: ${{ secrets.SEMGREP_APP_TOKEN }}

-          publishDeployment: ${{ secrets.SEMGREP_DEPLOYMENT_ID }}

-          generateSarif: "1"

-

-      # Upload SARIF file generated in previous step

-      - name: Upload SARIF file

-        uses: github/codeql-action/upload-sarif@v3

-        with:

-          sarif_file: semgrep.sarif

-        if: always()

diff --git a/.github/workflows/tests.yml b/.github/workflows/tests.yml

new file mode 100644

index 00000000..6e16b0dc

--- /dev/null

+++ b/.github/workflows/tests.yml

@@ -0,0 +1,31 @@

+name: Run Tests

+

+on:

+  push:

+    branches: [ "master" ]

+  pull_request:

+    branches: [ "master" ]

+

+jobs:

+  test:

+    runs-on: ubuntu-latest

+

+    steps:

+      - uses: actions/checkout@v4

+

+      - name: Set up Python 3.10

+        uses: actions/setup-python@v5

+        with:

+          python-version: "3.10"

+

+      - name: Install Poetry

+        run: |

+          curl -sSL https://install.python-poetry.org | python3 -

+

+      - name: Install dependencies

+        run: |

+          poetry install --with test

+

+      - name: Run tests

+        run: |

+          poetry run pytest tests/ -v

\ No newline at end of file

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index 3cf89799..827c2515 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -1,5 +1,15 @@

# Contribution Guidelines

+

+

+

+ The Enterprise-Grade Production-Ready Multi-Agent Orchestration Framework

+

+

---

## Table of Contents

@@ -7,10 +17,12 @@

- [Project Overview](#project-overview)

- [Getting Started](#getting-started)

- [Installation](#installation)

+ - [Environment Configuration](#environment-configuration)

- [Project Structure](#project-structure)

- [How to Contribute](#how-to-contribute)

- [Reporting Issues](#reporting-issues)

- [Submitting Pull Requests](#submitting-pull-requests)

+ - [Good First Issues](#good-first-issues)

- [Coding Standards](#coding-standards)

- [Type Annotations](#type-annotations)

- [Docstrings and Documentation](#docstrings-and-documentation)

@@ -19,7 +31,13 @@

- [Areas Needing Contributions](#areas-needing-contributions)

- [Writing Tests](#writing-tests)

- [Improving Documentation](#improving-documentation)

- - [Creating Training Scripts](#creating-training-scripts)

+ - [Adding New Swarm Architectures](#adding-new-swarm-architectures)

+ - [Enhancing Agent Capabilities](#enhancing-agent-capabilities)

+ - [Removing Defunct Code](#removing-defunct-code)

+- [Development Resources](#development-resources)

+ - [Documentation](#documentation)

+ - [Examples and Tutorials](#examples-and-tutorials)

+ - [API Reference](#api-reference)

- [Community and Support](#community-and-support)

- [License](#license)

@@ -27,16 +45,24 @@

## Project Overview

-**swarms** is a library focused on making it simple to orchestrate agents to automate real-world activities. The goal is to automate the world economy with these swarms of agents.

+**Swarms** is an enterprise-grade, production-ready multi-agent orchestration framework focused on making it simple to orchestrate agents to automate real-world activities. The goal is to automate the world economy with these swarms of agents.

-We need your help to:

+### Key Features

-- **Write Tests**: Ensure the reliability and correctness of the codebase.

-- **Improve Documentation**: Maintain clear and comprehensive documentation.

-- **Add New Orchestration Methods**: Add multi-agent orchestration methods

-- **Removing Defunct Code**: Removing bad code

+| Category | Features | Benefits |

+|----------|----------|-----------|

+| 🏢 Enterprise Architecture | • Production-Ready Infrastructure<br>• High Reliability Systems<br>• Modular Design<br>• Comprehensive Logging | • Reduced downtime<br>• Easier maintenance<br>• Better debugging<br>• Enhanced monitoring |

+| 🤖 Agent Orchestration | • Hierarchical Swarms<br>• Parallel Processing<br>• Sequential Workflows<br>• Graph-based Workflows<br>• Dynamic Agent Rearrangement | • Complex task handling<br>• Improved performance<br>• Flexible workflows<br>• Optimized execution |

+| 🔄 Integration Capabilities | • Multi-Model Support<br>• Custom Agent Creation<br>• Extensive Tool Library<br>• Multiple Memory Systems | • Provider flexibility<br>• Custom solutions<br>• Extended functionality<br>• Enhanced memory management |

+### We Need Your Help To:

+- **Write Tests**: Ensure the reliability and correctness of the codebase

+- **Improve Documentation**: Maintain clear and comprehensive documentation

+- **Add New Orchestration Methods**: Add multi-agent orchestration methods

+- **Remove Defunct Code**: Clean up and remove bad code

+- **Enhance Agent Capabilities**: Improve existing agents and add new ones

+- **Optimize Performance**: Improve speed and efficiency of swarm operations

Your contributions will help us push the boundaries of AI and make this library a valuable resource for the community.

@@ -46,24 +72,65 @@ Your contributions will help us push the boundaries of AI and make this library

### Installation

-You can install swarms using `pip`:

+#### Using pip

+```bash

+pip3 install -U swarms

+```

+

+#### Using uv (Recommended)

+[uv](https://github.com/astral-sh/uv) is a fast Python package installer and resolver, written in Rust.

+

+```bash

+# Install uv

+curl -LsSf https://astral.sh/uv/install.sh | sh

+

+# Install swarms using uv

+uv pip install swarms

+```

+

+#### Using poetry

+```bash

+# Install poetry if you haven't already

+curl -sSL https://install.python-poetry.org | python3 -

+

+# Add swarms to your project

+poetry add swarms

+```

+#### From source

```bash

-pip3 install swarms

+# Clone the repository

+git clone https://github.com/kyegomez/swarms.git

+cd swarms

+

+# Install with pip

+pip install -e .

```

-Alternatively, you can clone the repository:

+### Environment Configuration

+

+Create a `.env` file in your project root with the following variables:

```bash

-git clone https://github.com/kyegomez/swarms

+OPENAI_API_KEY=""

+WORKSPACE_DIR="agent_workspace"

+ANTHROPIC_API_KEY=""

+GROQ_API_KEY=""

```

+- [Learn more about environment configuration here](https://docs.swarms.world/en/latest/swarms/install/env/)

+

### Project Structure

-- **`swarms/`**: Contains all the source code for the library.

-- **`examples/`**: Includes example scripts and notebooks demonstrating how to use the library.

-- **`tests/`**: (To be created) Will contain unit tests for the library.

-- **`docs/`**: (To be maintained) Contains documentation files.

+- **`swarms/`**: Contains all the source code for the library

+ - **`agents/`**: Agent implementations and base classes

+ - **`structs/`**: Swarm orchestration structures (SequentialWorkflow, AgentRearrange, etc.)

+ - **`tools/`**: Tool implementations and base classes

+ - **`prompts/`**: System prompts and prompt templates

+ - **`utils/`**: Utility functions and helpers

+- **`examples/`**: Includes example scripts and notebooks demonstrating how to use the library

+- **`tests/`**: Unit tests for the library

+- **`docs/`**: Documentation files and guides

---

@@ -79,6 +146,10 @@ If you find any bugs, inconsistencies, or have suggestions for enhancements, ple

- **Description**: Detailed description, steps to reproduce, expected behavior, and any relevant logs or screenshots.

3. **Label Appropriately**: Use labels to categorize the issue (e.g., bug, enhancement, documentation).

+**Issue Templates**: Use our issue templates for bug reports and feature requests:

+- [Bug Report](https://github.com/kyegomez/swarms/issues/new?template=bug_report.md)

+- [Feature Request](https://github.com/kyegomez/swarms/issues/new?template=feature_request.md)

+

### Submitting Pull Requests

We welcome pull requests (PRs) for bug fixes, improvements, and new features. Please follow these guidelines:

@@ -88,6 +159,7 @@ We welcome pull requests (PRs) for bug fixes, improvements, and new features. Pl

```bash

git clone https://github.com/kyegomez/swarms.git

+ cd swarms

```

3. **Create a New Branch**: Use a descriptive branch name.

@@ -121,6 +193,13 @@ We welcome pull requests (PRs) for bug fixes, improvements, and new features. Pl

**Note**: It's recommended to create small and focused PRs for easier review and faster integration.

+### Good First Issues

+

+The easiest way to contribute is to pick any issue with the `good first issue` tag 💪. These are specifically designed for new contributors:

+

+- [Good First Issues](https://github.com/kyegomez/swarms/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22)

+- [Contributing Board](https://github.com/users/kyegomez/projects/1) - Participate in Roadmap discussions!

+

---

## Coding Standards

@@ -204,6 +283,7 @@ We have several areas where contributions are particularly welcome.

- Write unit tests for existing code in `swarms/`.

- Identify edge cases and potential failure points.

- Ensure tests are repeatable and independent.

+ - Add integration tests for swarm orchestration methods.

### Improving Documentation

@@ -212,27 +292,113 @@ We have several areas where contributions are particularly welcome.

- Update docstrings to reflect any changes.

- Add examples and tutorials in the `examples/` directory.

- Improve or expand the content in the `docs/` directory.

+ - Create video tutorials and walkthroughs.

+

+### Adding New Swarm Architectures

+

+- **Goal**: Provide new multi-agent orchestration methods.

+- **Current Architectures**:

+ - [SequentialWorkflow](https://docs.swarms.world/en/latest/swarms/structs/sequential_workflow/)

+ - [AgentRearrange](https://docs.swarms.world/en/latest/swarms/structs/agent_rearrange/)

+ - [MixtureOfAgents](https://docs.swarms.world/en/latest/swarms/structs/moa/)

+ - [SpreadSheetSwarm](https://docs.swarms.world/en/latest/swarms/structs/spreadsheet_swarm/)

+ - [ForestSwarm](https://docs.swarms.world/en/latest/swarms/structs/forest_swarm/)

+ - [GraphWorkflow](https://docs.swarms.world/en/latest/swarms/structs/graph_swarm/)

+ - [GroupChat](https://docs.swarms.world/en/latest/swarms/structs/group_chat/)

+ - [SwarmRouter](https://docs.swarms.world/en/latest/swarms/structs/swarm_router/)

+

+### Enhancing Agent Capabilities

+

+- **Goal**: Improve existing agents and add new specialized agents.

+- **Areas of Focus**:

+ - Financial analysis agents

+ - Medical diagnosis agents

+ - Code generation and review agents

+ - Research and analysis agents

+ - Creative content generation agents

+

+### Removing Defunct Code

+

+- **Goal**: Clean up and remove bad code to improve maintainability.

+- **Tasks**:

+ - Identify unused or deprecated code.

+ - Remove duplicate implementations.

+ - Simplify complex functions.

+ - Update outdated dependencies.

+

+---

+

+## Development Resources

+

+### Documentation

+

+- **Official Documentation**: [docs.swarms.world](https://docs.swarms.world)

+- **Installation Guide**: [Installation](https://docs.swarms.world/en/latest/swarms/install/install/)

+- **Quickstart Guide**: [Get Started](https://docs.swarms.world/en/latest/swarms/install/quickstart/)

+- **Agent Architecture**: [Agent Internal Mechanisms](https://docs.swarms.world/en/latest/swarms/framework/agents_explained/)

+- **Agent API**: [Agent API](https://docs.swarms.world/en/latest/swarms/structs/agent/)

+

+### Examples and Tutorials

+

+- **Basic Examples**: [examples/](https://github.com/kyegomez/swarms/tree/master/examples)

+- **Agent Examples**: [examples/single_agent/](https://github.com/kyegomez/swarms/tree/master/examples/single_agent)

+- **Multi-Agent Examples**: [examples/multi_agent/](https://github.com/kyegomez/swarms/tree/master/examples/multi_agent)

+- **Tool Examples**: [examples/tools/](https://github.com/kyegomez/swarms/tree/master/examples/tools)

-### Creating Multi-Agent Orchestration Methods

+### API Reference

-- **Goal**: Provide new multi-agent orchestration methods

+- **Core Classes**: [swarms/structs/](https://github.com/kyegomez/swarms/tree/master/swarms/structs)

+- **Agent Implementations**: [swarms/agents/](https://github.com/kyegomez/swarms/tree/master/swarms/agents)

+- **Tool Implementations**: [swarms/tools/](https://github.com/kyegomez/swarms/tree/master/swarms/tools)

+- **Utility Functions**: [swarms/utils/](https://github.com/kyegomez/swarms/tree/master/swarms/utils)

---

## Community and Support

+### Connect With Us

+

+| Platform | Link | Description |

+|----------|------|-------------|

+| 📚 Documentation | [docs.swarms.world](https://docs.swarms.world) | Official documentation and guides |

+| 📝 Blog | [Medium](https://medium.com/@kyeg) | Latest updates and technical articles |

+| 💬 Discord | [Join Discord](https://discord.gg/jM3Z6M9uMq) | Live chat and community support |

+| 🐦 Twitter | [@kyegomez](https://twitter.com/kyegomez) | Latest news and announcements |

+| 👥 LinkedIn | [The Swarm Corporation](https://www.linkedin.com/company/the-swarm-corporation) | Professional network and updates |

+| 📺 YouTube | [Swarms Channel](https://www.youtube.com/channel/UC9yXyitkbU_WSy7bd_41SqQ) | Tutorials and demos |

+| 🎫 Events | [Sign up here](https://lu.ma/5p2jnc2v) | Join our community events |

+

+### Onboarding Session

+

+Get onboarded with the creator and lead maintainer of Swarms, Kye Gomez, who will show you how to get started with the installation, usage examples, and starting to build your custom use case! [CLICK HERE](https://cal.com/swarms/swarms-onboarding-session)

+

+### Community Guidelines

+

- **Communication**: Engage with the community by participating in discussions on issues and pull requests.

- **Respect**: Maintain a respectful and inclusive environment.

- **Feedback**: Be open to receiving and providing constructive feedback.

+- **Collaboration**: Work together to improve the project for everyone.

---

## License

-By contributing to swarms, you agree that your contributions will be licensed under the [MIT License](LICENSE).

+By contributing to swarms, you agree that your contributions will be licensed under the [Apache License](LICENSE).

+

+---

+

+## Citation

+

+If you use **swarms** in your research, please cite the project by referencing the metadata in [CITATION.cff](./CITATION.cff).

---

Thank you for contributing to swarms! Your efforts help make this project better for everyone.

-If you have any questions or need assistance, please feel free to open an issue or reach out to the maintainers.

\ No newline at end of file

+If you have any questions or need assistance, please feel free to:

+- Open an issue on GitHub

+- Join our Discord community

+- Reach out to the maintainers

+- Schedule an onboarding session

+

+**Happy contributing! 🚀**

\ No newline at end of file

diff --git a/README.md b/README.md

index 8826000e..94af41d3 100644

--- a/README.md

+++ b/README.md

@@ -1,6 +1,6 @@

@@ -98,36 +98,15 @@

## ✨ Features

+Swarms delivers a comprehensive, enterprise-grade multi-agent infrastructure platform designed for production-scale deployments and seamless integration with existing systems. [Learn more about the swarms feature set here](https://docs.swarms.world/en/latest/swarms/features/)

+

| Category | Features | Benefits |

|----------|----------|-----------|

-| 🏢 Enterprise Architecture | • Production-Ready Infrastructure<br>• High Reliability Systems<br>• Modular Design<br>• Comprehensive Logging | • Reduced downtime<br>• Easier maintenance<br>• Better debugging<br>• Enhanced monitoring |

-| 🤖 Agent Orchestration | • Hierarchical Swarms<br>• Parallel Processing<br>• Sequential Workflows<br>• Graph-based Workflows<br>• Dynamic Agent Rearrangement | • Complex task handling<br>• Improved performance<br>• Flexible workflows<br>• Optimized execution |

-| 🔄 Integration Capabilities | • Multi-Model Support<br>• Custom Agent Creation<br>• Extensive Tool Library<br>• Multiple Memory Systems | • Provider flexibility<br>• Custom solutions<br>• Extended functionality<br>• Enhanced memory management |

-| 📈 Scalability | • Concurrent Processing<br>• Resource Management<br>• Load Balancing<br>• Horizontal Scaling | • Higher throughput<br>• Efficient resource use<br>• Better performance<br>• Easy scaling |

-| 🛠️ Developer Tools | • Simple API<br>• Extensive Documentation<br>• Active Community<br>• CLI Tools | • Faster development<br>• Easy learning curve<br>• Community support<br>• Quick deployment |

-| 🔐 Security Features | • Error Handling<br>• Rate Limiting<br>• Monitoring Integration<br>• Audit Logging | • Improved reliability<br>• API protection<br>• Better monitoring<br>• Enhanced tracking |

-| 📊 Advanced Features | • SpreadsheetSwarm<br>• Group Chat<br>• Agent Registry<br>• Mixture of Agents | • Mass agent management<br>• Collaborative AI<br>• Centralized control<br>• Complex solutions |

-| 🔌 Provider Support | • OpenAI<br>• Anthropic<br>• ChromaDB<br>• Custom Providers | • Provider flexibility<br>• Storage options<br>• Custom integration<br>• Vendor independence |

-| 💪 Production Features | • Automatic Retries<br>• Async Support<br>• Environment Management<br>• Type Safety | • Better reliability<br>• Improved performance<br>• Easy configuration<br>• Safer code |

-| 🎯 Use Case Support | • Task-Specific Agents<br>• Custom Workflows<br>• Industry Solutions<br>• Extensible Framework | • Quick deployment<br>• Flexible solutions<br>• Industry readiness<br>• Easy customization |

-

-

-## Guides and Walkthroughs

-Refer to our documentation for production grade implementation details.

-

-

-| Section | Links |

-|----------------------|--------------------------------------------------------------------------------------------|

-| Installation | [Installation](https://docs.swarms.world/en/latest/swarms/install/install/) |

-| Quickstart | [Get Started](https://docs.swarms.world/en/latest/swarms/install/quickstart/) |

-| Agent Internal Mechanisms | [Agent Architecture](https://docs.swarms.world/en/latest/swarms/framework/agents_explained/) |

-| Agent API | [Agent API](https://docs.swarms.world/en/latest/swarms/structs/agent/) |

-| Integrating External Agents Griptape, Autogen, etc | [Integrating External APIs](https://docs.swarms.world/en/latest/swarms/agents/external_party_agents/) |

-| Creating Agents from YAML | [Creating Agents from YAML](https://docs.swarms.world/en/latest/swarms/agents/create_agents_yaml/) |

-| Why You Need Swarms | [Why MultiAgent Collaboration is Necessary](https://docs.swarms.world/en/latest/swarms/concept/why/) |

-| Swarm Architectures Analysis | [Swarm Architectures](https://docs.swarms.world/en/latest/swarms/concept/swarm_architectures/) |

-| Choosing the Right Swarm for Your Business Problem¶ | [CLICK HERE](https://docs.swarms.world/en/latest/swarms/concept/swarm_architectures/) |

-| AgentRearrange Docs| [CLICK HERE](https://docs.swarms.world/en/latest/swarms/structs/agent_rearrange/) |

+| 🏢 **Enterprise Architecture** | • Production-Ready Infrastructure<br>• High Availability Systems<br>• Modular Microservices Design<br>• Comprehensive Observability<br>• Backwards Compatibility | • 99.9%+ Uptime Guarantee<br>• Reduced Operational Overhead<br>• Seamless Legacy Integration<br>• Enhanced System Monitoring<br>• Risk-Free Migration Path |

+| 🤖 **Multi-Agent Orchestration** | • Hierarchical Agent Swarms<br>• Parallel Processing Pipelines<br>• Sequential Workflow Orchestration<br>• Graph-Based Agent Networks<br>• Dynamic Agent Composition<br>• Agent Registry Management | • Complex Business Process Automation<br>• Scalable Task Distribution<br>• Flexible Workflow Adaptation<br>• Optimized Resource Utilization<br>• Centralized Agent Governance<br>• Enterprise-Grade Agent Lifecycle Management |

+| 🔄 **Enterprise Integration** | • Multi-Model Provider Support<br>• Custom Agent Development Framework<br>• Extensive Enterprise Tool Library<br>• Multiple Memory Systems<br>• Backwards Compatibility with LangChain, AutoGen, CrewAI<br>• Standardized API Interfaces | • Vendor-Agnostic Architecture<br>• Custom Solution Development<br>• Extended Functionality Integration<br>• Enhanced Knowledge Management<br>• Seamless Framework Migration<br>• Reduced Integration Complexity |

+| 📈 **Enterprise Scalability** | • Concurrent Multi-Agent Processing<br>• Intelligent Resource Management<br>• Load Balancing & Auto-Scaling<br>• Horizontal Scaling Capabilities<br>• Performance Optimization<br>• Capacity Planning Tools | • High-Throughput Processing<br>• Cost-Effective Resource Utilization<br>• Elastic Scaling Based on Demand<br>• Linear Performance Scaling<br>• Optimized Response Times<br>• Predictable Growth Planning |

+| 🛠️ **Developer Experience** | • Intuitive Enterprise API<br>• Comprehensive Documentation<br>• Active Enterprise Community<br>• CLI & SDK Tools<br>• IDE Integration Support<br>• Code Generation Templates | • Accelerated Development Cycles<br>• Reduced Learning Curve<br>• Expert Community Support<br>• Rapid Deployment Capabilities<br>• Enhanced Developer Productivity<br>• Standardized Development Patterns |

## Install 💻

@@ -171,6 +150,8 @@ $ pip install -e .

## Environment Configuration

+[Learn more about the environment configuration here](https://docs.swarms.world/en/latest/swarms/install/env/)

+

```

OPENAI_API_KEY=""

WORKSPACE_DIR="agent_workspace"

@@ -178,1898 +159,424 @@ ANTHROPIC_API_KEY=""

GROQ_API_KEY=""

```

-- [Learn more about the environment configuration here](https://docs.swarms.world/en/latest/swarms/install/env/)

-

----

-

-## `Agent` Class

-The `Agent` class is a customizable autonomous component of the Swarms framework that integrates LLMs, tools, and long-term memory. Its `run` method processes text tasks and optionally handles image inputs through vision-language models.

-

-```mermaid

-graph TD

- A[Agent] --> B[Initialize]

- B --> C[Process Task]

- C --> D[Execute Tools]

- D --> E[Generate Response]

- E --> F[Return Output]

- C --> G[Long-term Memory]

- G --> C

-```

+### 🤖 Your First Agent

-

-

-## Simple Example

+An **Agent** is the fundamental building block of a swarm—an autonomous entity powered by an LLM + Tools + Memory. [Learn more Here](https://docs.swarms.world/en/latest/swarms/structs/agent/)

```python

from swarms import Agent

+# Initialize a new agent

agent = Agent(

- agent_name="Stock-Analysis-Agent",

- model_name="gpt-4o-mini",

- max_loops="auto",

- interactive=True,

- streaming_on=True,

+ model_name="gpt-4o-mini", # Specify the LLM

+ max_loops=1, # Set the number of interactions

+ interactive=True, # Enable interactive mode for real-time feedback

)

-agent.run("What is the current market trend for tech stocks?")

-

+# Run the agent with a task

+agent.run("What are the key benefits of using a multi-agent system?")

```

-### Settings and Customization

-

-The `Agent` class offers a range of settings to tailor its behavior to specific needs. Some key settings include:

-

-| Setting | Description | Default Value |

-| --- | --- | --- |

-| `agent_name` | The name of the agent. | "DefaultAgent" |

-| `system_prompt` | The system prompt to use for the agent. | "Default system prompt." |

-| `llm` | The language model to use for processing tasks. | `OpenAIChat` instance |

-| `max_loops` | The maximum number of loops to execute for a task. | 1 |

-| `autosave` | Enables or disables autosaving of the agent's state. | False |

-| `dashboard` | Enables or disables the dashboard for the agent. | False |

-| `verbose` | Controls the verbosity of the agent's output. | False |

-| `dynamic_temperature_enabled` | Enables or disables dynamic temperature adjustment for the language model. | False |

-| `saved_state_path` | The path to save the agent's state. | "agent_state.json" |

-| `user_name` | The username associated with the agent. | "default_user" |

-| `retry_attempts` | The number of retry attempts for failed tasks. | 1 |

-| `context_length` | The maximum length of the context to consider for tasks. | 200000 |

-| `return_step_meta` | Controls whether to return step metadata in the output. | False |

-| `output_type` | The type of output to return (e.g., "json", "string"). | "string" |

+### 🤝 Your First Swarm: Multi-Agent Collaboration

+A **Swarm** consists of multiple agents working together. This simple example creates a two-agent workflow for researching and writing a blog post. [Learn More About SequentialWorkflow](https://docs.swarms.world/en/latest/swarms/structs/sequential_workflow/)

```python

-import os

-from swarms import Agent

+from swarms import Agent, SequentialWorkflow

-from swarms.prompts.finance_agent_sys_prompt import (

- FINANCIAL_AGENT_SYS_PROMPT,

-)

-# Initialize the agent

-agent = Agent(

- agent_name="Financial-Analysis-Agent",

- system_prompt=FINANCIAL_AGENT_SYS_PROMPT,

+# Agent 1: The Researcher

+researcher = Agent(

+ agent_name="Researcher",

+ system_prompt="Your job is to research the provided topic and provide a detailed summary.",

model_name="gpt-4o-mini",

- max_loops=1,

- autosave=True,

- dashboard=False,

- verbose=True,

- dynamic_temperature_enabled=True,

- saved_state_path="finance_agent.json",

- user_name="swarms_corp",

- retry_attempts=1,

- context_length=200000,

- return_step_meta=False,

- output_type="string",

- streaming_on=False,

)

-

-agent.run(

- "How can I establish a ROTH IRA to buy stocks and get a tax break? What are the criteria"

-)

-

-```

------

-

-### Integrating RAG with Swarms for Enhanced Long-Term Memory

-

-`Agent` equipped with quasi-infinite long term memory using RAG (Relational Agent Graph) for advanced document understanding, analysis, and retrieval capabilities.

-

-**Mermaid Diagram for RAG Integration**

-```mermaid

-graph TD

- A[Initialize Agent with RAG] --> B[Receive Task]

- B --> C[Query Long-Term Memory]

- C --> D[Process Task with Context]

- D --> E[Generate Response]

- E --> F[Update Long-Term Memory]

- F --> G[Return Output]

-```

-

-```python

-from swarms import Agent

-from swarms.prompts.finance_agent_sys_prompt import (

- FINANCIAL_AGENT_SYS_PROMPT,

-)

-import os

-

-from swarms_memory import ChromaDB

-

-# Initialize the ChromaDB client for long-term memory management

-chromadb = ChromaDB(

- metric="cosine", # Metric for similarity measurement

- output_dir="finance_agent_rag", # Directory for storing RAG data

- # docs_folder="artifacts", # Uncomment and specify the folder containing your documents

-)

-

-# Initialize the agent with RAG capabilities

-agent = Agent(

- agent_name="Financial-Analysis-Agent",

- system_prompt=FINANCIAL_AGENT_SYS_PROMPT,

- agent_description="Agent creates a comprehensive financial analysis",

+# Agent 2: The Writer

+writer = Agent(

+ agent_name="Writer",

+ system_prompt="Your job is to take the research summary and write a beautiful, engaging blog post about it.",

model_name="gpt-4o-mini",

- max_loops="auto", # Auto-adjusts loops based on task complexity

- autosave=True, # Automatically saves agent state

- dashboard=False, # Disables dashboard for this example

- verbose=True, # Enables verbose mode for detailed output

- streaming_on=True, # Enables streaming for real-time processing

- dynamic_temperature_enabled=True, # Dynamically adjusts temperature for optimal performance

- saved_state_path="finance_agent.json", # Path to save agent state

- user_name="swarms_corp", # User name for the agent

- retry_attempts=3, # Number of retry attempts for failed tasks

- context_length=200000, # Maximum length of the context to consider

- long_term_memory=chromadb, # Integrates ChromaDB for long-term memory management

- return_step_meta=False,

- output_type="string",

-)

-

-# Run the agent with a sample task

-agent.run(

- "What are the components of a startup's stock incentive equity plan"

-)

-```

-

-

-## Structured Outputs

-

-1. Create a tool schema

-2. Create a function schema

-3. Create a tool list dictionary

-4. Initialize the agent

-5. Run the agent

-6. Print the output

-7. Convert the output to a dictionary

-

-```python

-

-from dotenv import load_dotenv

-

-from swarms import Agent

-from swarms.prompts.finance_agent_sys_prompt import (

- FINANCIAL_AGENT_SYS_PROMPT,

)

-from swarms.utils.str_to_dict import str_to_dict

-

-load_dotenv()

-

-tools = [

- {

- "type": "function",

- "function": {

- "name": "get_stock_price",

- "description": "Retrieve the current stock price and related information for a specified company.",

- "parameters": {

- "type": "object",

- "properties": {

- "ticker": {

- "type": "string",

- "description": "The stock ticker symbol of the company, e.g. AAPL for Apple Inc.",

- },

- "include_history": {

- "type": "boolean",

- "description": "Indicates whether to include historical price data along with the current price.",

- },

- "time": {

- "type": "string",

- "format": "date-time",

- "description": "Optional parameter to specify the time for which the stock data is requested, in ISO 8601 format.",

- },

- },

- "required": [

- "ticker",

- "include_history",

- "time",

- ],

- },

- },

- }

-]

-

-# Initialize the agent

-agent = Agent(

- agent_name="Financial-Analysis-Agent",

- agent_description="Personal finance advisor agent",

- system_prompt=FINANCIAL_AGENT_SYS_PROMPT,

- max_loops=1,

- tools_list_dictionary=tools,

-)

+# Create a sequential workflow where the researcher's output feeds into the writer's input

+workflow = SequentialWorkflow(agents=[researcher, writer])

-out = agent.run(

- "What is the current stock price for Apple Inc. (AAPL)? Include historical price data.",

-)

-

-print(out)

-

-print(type(out))

-

-print(str_to_dict(out))

+# Run the workflow on a task

+final_post = workflow.run("The history and future of artificial intelligence")

+print(final_post)

-print(type(str_to_dict(out)))

```

--------

-

-### Misc Agent Settings

-We provide vast array of features to save agent states using json, yaml, toml, upload pdfs, batched jobs, and much more!

-

-

-**Method Table**

-

-| Method | Description |

-| --- | --- |

-| `to_dict()` | Converts the agent object to a dictionary. |

-| `to_toml()` | Converts the agent object to a TOML string. |

-| `model_dump_json()` | Dumps the model to a JSON file. |

-| `model_dump_yaml()` | Dumps the model to a YAML file. |

-| `ingest_docs()` | Ingests documents into the agent's knowledge base. |

-| `receive_message()` | Receives a message from a user and processes it. |

-| `send_agent_message()` | Sends a message from the agent to a user. |

-| `filtered_run()` | Runs the agent with a filtered system prompt. |

-| `bulk_run()` | Runs the agent with multiple system prompts. |

-| `add_memory()` | Adds a memory to the agent. |

-| `check_available_tokens()` | Checks the number of available tokens for the agent. |

-| `tokens_checks()` | Performs token checks for the agent. |

-| `print_dashboard()` | Prints the dashboard of the agent. |

-| `get_docs_from_doc_folders()` | Fetches all the documents from the doc folders. |

-

-

-

-```python

-# # Convert the agent object to a dictionary

-print(agent.to_dict())

-print(agent.to_toml())

-print(agent.model_dump_json())

-print(agent.model_dump_yaml())

-

-# Ingest documents into the agent's knowledge base

-("your_pdf_path.pdf")

-

-# Receive a message from a user and process it

-agent.receive_message(name="agent_name", message="message")

-

-# Send a message from the agent to a user

-agent.send_agent_message(agent_name="agent_name", message="message")

-

-# Ingest multiple documents into the agent's knowledge base

-agent.ingest_docs("your_pdf_path.pdf", "your_csv_path.csv")

-

-# Run the agent with a filtered system prompt

-agent.filtered_run(

- "How can I establish a ROTH IRA to buy stocks and get a tax break? What are the criteria?"

-)

-

-# Run the agent with multiple system prompts

-agent.bulk_run(

- [

- "How can I establish a ROTH IRA to buy stocks and get a tax break? What are the criteria?",

- "Another system prompt",

- ]

-)

-

-# Add a memory to the agent

-agent.add_memory("Add a memory to the agent")

-

-# Check the number of available tokens for the agent

-agent.check_available_tokens()

-

-# Perform token checks for the agent

-agent.tokens_checks()

-

-# Print the dashboard of the agent

-agent.print_dashboard()

-

-# Fetch all the documents from the doc folders

-agent.get_docs_from_doc_folders()

+-----

-# Activate agent ops

+## 🏗️ Multi-Agent Architectures For Production Deployments

-# Dump the model to a JSON file

-agent.model_dump_json()

-print(agent.to_toml())

+`swarms` provides a range of pre-built multi-agent architectures for orchestrating agents in different ways. Choose the structure that fits your specific problem to build efficient and reliable production systems.

-```

+| **Architecture** | **Description** | **Best For** |

+|---|---|---|

+| **[SequentialWorkflow](https://docs.swarms.world/en/latest/swarms/structs/sequential_workflow/)** | Agents execute tasks in a linear chain; one agent's output is the next one's input. | Step-by-step processes like data transformation pipelines, report generation. |

+| **[ConcurrentWorkflow](https://docs.swarms.world/en/latest/swarms/structs/concurrent_workflow/)** | Agents run tasks simultaneously for maximum efficiency. | High-throughput tasks like batch processing, parallel data analysis. |

+| **[AgentRearrange](https://docs.swarms.world/en/latest/swarms/structs/agent_rearrange/)** | Dynamically maps complex relationships (e.g., `a -> b, c`) between agents. | Flexible and adaptive workflows, task distribution, dynamic routing. |

+| **[GraphWorkflow](https://docs.swarms.world/en/latest/swarms/structs/graph_workflow/)** | Orchestrates agents as nodes in a Directed Acyclic Graph (DAG). | Complex projects with intricate dependencies, like software builds. |

+| **[MixtureOfAgents (MoA)](https://docs.swarms.world/en/latest/swarms/structs/moa/)** | Utilizes multiple expert agents in parallel and synthesizes their outputs. | Complex problem-solving, achieving state-of-the-art performance through collaboration. |

+| **[GroupChat](https://docs.swarms.world/en/latest/swarms/structs/group_chat/)** | Agents collaborate and make decisions through a conversational interface. | Real-time collaborative decision-making, negotiations, brainstorming. |

+| **[ForestSwarm](https://docs.swarms.world/en/latest/swarms/structs/forest_swarm/)** | Dynamically selects the most suitable agent or tree of agents for a given task. | Task routing, optimizing for expertise, complex decision-making trees. |

+| **[SpreadSheetSwarm](https://docs.swarms.world/en/latest/swarms/structs/spreadsheet_swarm/)** | Manages thousands of agents concurrently, tracking tasks and outputs in a structured format. | Massive-scale parallel operations, large-scale data generation and analysis. |

+| **[SwarmRouter](https://docs.swarms.world/en/latest/swarms/structs/swarm_router/)** | Universal orchestrator that provides a single interface to run any type of swarm with dynamic selection. | Simplifying complex workflows, switching between swarm strategies, unified multi-agent management. |

+-----

+### SequentialWorkflow

-### `Agent`with Pydantic BaseModel as Output Type

-The following is an example of an agent that intakes a pydantic basemodel and outputs it at the same time:

+A `SequentialWorkflow` executes tasks in a strict order, forming a pipeline where each agent builds upon the work of the previous one. It is ideal for processes with clear, ordered steps, because each agent receives the previous agent's output and tasks with dependencies are therefore handled in the correct order.

```python

-from pydantic import BaseModel, Field

-from swarms import Agent

-

-

-# Initialize the schema for the person's information

-class Schema(BaseModel):

- name: str = Field(..., title="Name of the person")

- agent: int = Field(..., title="Age of the person")

- is_student: bool = Field(..., title="Whether the person is a student")

- courses: list[str] = Field(

- ..., title="List of courses the person is taking"

- )

-

-

-# Convert the schema to a JSON string

-tool_schema = Schema(

- name="Tool Name",

- agent=1,

- is_student=True,

- courses=["Course1", "Course2"],

-)

-

-# Define the task to generate a person's information

-task = "Generate a person's information based on the following schema:"

-

-# Initialize the agent

-agent = Agent(

- agent_name="Person Information Generator",

- system_prompt=(

- "Generate a person's information based on the following schema:"

- ),

- # Set the tool schema to the JSON string -- this is the key difference

- tool_schema=tool_schema,

- model_name="gpt-4o",

- max_loops=3,

- autosave=True,

- dashboard=False,

- streaming_on=True,

- verbose=True,

- interactive=True,

- # Set the output type to the tool schema which is a BaseModel

- output_type=tool_schema, # or dict, or str

- metadata_output_type="json",

- # List of schemas that the agent can handle

- list_base_models=[tool_schema],

- function_calling_format_type="OpenAI",

- function_calling_type="json", # or soon yaml

-)

-

-# Run the agent to generate the person's information

-generated_data = agent.run(task)

+from swarms import Agent, SequentialWorkflow

-# Print the generated data

-print(f"Generated data: {generated_data}")

+# Initialize agents for a 3-step process

+# 1. Generate an idea

+idea_generator = Agent(agent_name="IdeaGenerator", system_prompt="Generate a unique startup idea.", model_name="gpt-4o-mini")

+# 2. Validate the idea

+validator = Agent(agent_name="Validator", system_prompt="Take this startup idea and analyze its market viability.", model_name="gpt-4o-mini")

+# 3. Create a pitch

+pitch_creator = Agent(agent_name="PitchCreator", system_prompt="Write a 3-sentence elevator pitch for this validated startup idea.", model_name="gpt-4o-mini")

+# Create the sequential workflow

+workflow = SequentialWorkflow(agents=[idea_generator, validator, pitch_creator])

+# Run the workflow

+elevator_pitch = workflow.run("Generate a startup idea, analyze its market viability, and write an elevator pitch.")

+print(elevator_pitch)

```

-### Multi Modal Autonomous Agent

-Run the agent with multiple modalities useful for various real-world tasks in manufacturing, logistics, and health.

-

-```python

-import os

-from dotenv import load_dotenv

-from swarms import Agent

-

-from swarm_models import GPT4VisionAPI

-

-# Load the environment variables

-load_dotenv()

-

-

-# Initialize the language model

-llm = GPT4VisionAPI(

- openai_api_key=os.environ.get("OPENAI_API_KEY"),

- max_tokens=500,

-)

-

-# Initialize the task

-task = (

- "Analyze this image of an assembly line and identify any issues such as"

- " misaligned parts, defects, or deviations from the standard assembly"

- " process. If there is anything unsafe in the image, explain why it is"

- " unsafe and how it could be improved."

-)

-img = "assembly_line.jpg"

-

-## Initialize the workflow

-agent = Agent(

- agent_name = "Multi-ModalAgent",

- llm=llm,

- max_loops="auto",

- autosave=True,

- dashboard=True,

- multi_modal=True

-)

-

-# Run the workflow on a task

-agent.run(task, img)

-```

-----

+-----

-### Local Agent `ToolAgent`

-ToolAgent is a fully local agent that can use tools through JSON function calling. It intakes any open source model from huggingface and is extremely modular and plug in and play. We need help adding general support to all models soon.

+### ConcurrentWorkflow (with `SpreadSheetSwarm`)

+A concurrent workflow runs multiple agents simultaneously. `SpreadSheetSwarm` is a powerful implementation that can manage thousands of concurrent agents and log their outputs to a CSV file. Use this architecture for high-throughput tasks that can be performed in parallel, drastically reducing execution time.

```python

-from pydantic import BaseModel, Field

-from transformers import AutoModelForCausalLM, AutoTokenizer

-

-from swarms import ToolAgent

-from swarms.tools.json_utils import base_model_to_json

-

-# Load the pre-trained model and tokenizer

-model = AutoModelForCausalLM.from_pretrained(

- "databricks/dolly-v2-12b",

- load_in_4bit=True,

- device_map="auto",

-)

-tokenizer = AutoTokenizer.from_pretrained("databricks/dolly-v2-12b")

-

-

-# Initialize the schema for the person's information

-class Schema(BaseModel):

- name: str = Field(..., title="Name of the person")

- agent: int = Field(..., title="Age of the person")

- is_student: bool = Field(

- ..., title="Whether the person is a student"

- )

- courses: list[str] = Field(

- ..., title="List of courses the person is taking"

- )

-

-

-# Convert the schema to a JSON string

-tool_schema = base_model_to_json(Schema)

-

-# Define the task to generate a person's information

-task = (

- "Generate a person's information based on the following schema:"

-)

-

-# Create an instance of the ToolAgent class

-agent = ToolAgent(

- name="dolly-function-agent",

- description="An agent to create a child's data",

- model=model,

- tokenizer=tokenizer,

- json_schema=tool_schema,

-)

-

-# Run the agent to generate the person's information

-generated_data = agent.run(task)

-

-# Print the generated data

-print(f"Generated data: {generated_data}")

-

-```

-

-

-## Understanding Swarms

-

-A swarm refers to a group of more than two agents working collaboratively to achieve a common goal. These agents can be software entities, such as llms that interact with each other to perform complex tasks. The concept of a swarm is inspired by natural systems like ant colonies or bird flocks, where simple individual behaviors lead to complex group dynamics and problem-solving capabilities.

-

-```mermaid

-graph TD

- A[Swarm] --> B[Agent 1]

- A --> C[Agent 2]

- A --> D[Agent N]

- B --> E[Task Processing]

- C --> E

- D --> E

- E --> F[Result Aggregation]

- F --> G[Final Output]

-```

-

-### How Swarm Architectures Facilitate Communication

-

-Swarm architectures are designed to establish and manage communication between agents within a swarm. These architectures define how agents interact, share information, and coordinate their actions to achieve the desired outcomes. Here are some key aspects of swarm architectures:

-

-1. **Hierarchical Communication**: In hierarchical swarms, communication flows from higher-level agents to lower-level agents. Higher-level agents act as coordinators, distributing tasks and aggregating results. This structure is efficient for tasks that require top-down control and decision-making.

-

-2. **Parallel Communication**: In parallel swarms, agents operate independently and communicate with each other as needed. This architecture is suitable for tasks that can be processed concurrently without dependencies, allowing for faster execution and scalability.

-

-3. **Sequential Communication**: Sequential swarms process tasks in a linear order, where each agent's output becomes the input for the next agent. This ensures that tasks with dependencies are handled in the correct sequence, maintaining the integrity of the workflow.

-

-```mermaid

-graph LR

- A[Hierarchical] --> D[Task Distribution]

- B[Parallel] --> E[Concurrent Processing]

- C[Sequential] --> F[Linear Processing]

- D --> G[Results]

- E --> G

- F --> G

-```

-

-Swarm architectures leverage these communication patterns to ensure that agents work together efficiently, adapting to the specific requirements of the task at hand. By defining clear communication protocols and interaction models, swarm architectures enable the seamless orchestration of multiple agents, leading to enhanced performance and problem-solving capabilities.

-

-

-| **Name** | **Description** | **Code Link** | **Use Cases** |

-|-------------------------------|-------------------------------------------------------------------------------------------------------------------------------------------------------------------------|----------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------|

-| Hierarchical Swarms | A system where agents are organized in a hierarchy, with higher-level agents coordinating lower-level agents to achieve complex tasks. | [Code Link](https://docs.swarms.world/en/latest/swarms/concept/swarm_architectures/#hierarchical-swarm) | Manufacturing process optimization, multi-level sales management, healthcare resource coordination |

-| Agent Rearrange | A setup where agents rearrange themselves dynamically based on the task requirements and environmental conditions. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/agent_rearrange/) | Adaptive manufacturing lines, dynamic sales territory realignment, flexible healthcare staffing |

-| Concurrent Workflows | Agents perform different tasks simultaneously, coordinating to complete a larger goal. | [Code Link](https://docs.swarms.world/en/latest/swarms/concept/swarm_architectures/#concurrent-workflows) | Concurrent production lines, parallel sales operations, simultaneous patient care processes |

-| Sequential Coordination | Agents perform tasks in a specific sequence, where the completion of one task triggers the start of the next. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/sequential_workflow/) | Step-by-step assembly lines, sequential sales processes, stepwise patient treatment workflows |

-| Parallel Processing | Agents work on different parts of a task simultaneously to speed up the overall process. | [Code Link](https://docs.swarms.world/en/latest/swarms/concept/swarm_architectures/#parallel-processing) | Parallel data processing in manufacturing, simultaneous sales analytics, concurrent medical tests |

-| Mixture of Agents | A heterogeneous swarm where agents with different capabilities are combined to solve complex problems. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/moa/) | Financial forecasting, complex problem-solving requiring diverse skills |

-| Graph Workflow | Agents collaborate in a directed acyclic graph (DAG) format to manage dependencies and parallel tasks. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/graph_workflow/) | AI-driven software development pipelines, complex project management |

-| Group Chat | Agents engage in a chat-like interaction to reach decisions collaboratively. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/group_chat/) | Real-time collaborative decision-making, contract negotiations |

-| Agent Registry | A centralized registry where agents are stored, retrieved, and invoked dynamically. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/agent_registry/) | Dynamic agent management, evolving recommendation engines |

-| Spreadsheet Swarm | Manages tasks at scale, tracking agent outputs in a structured format like CSV files. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/spreadsheet_swarm/) | Large-scale marketing analytics, financial audits |

-| Forest Swarm | A swarm structure that organizes agents in a tree-like hierarchy for complex decision-making processes. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/forest_swarm/) | Multi-stage workflows, hierarchical reinforcement learning |

-| Swarm Router | Routes and chooses the swarm architecture based on the task requirements and available agents. | [Code Link](https://docs.swarms.world/en/latest/swarms/structs/swarm_router/) | Dynamic task routing, adaptive swarm architecture selection, optimized agent allocation |

-

-

-

-## Swarms API

-

-We recently launched our enterprise-grade Swarms API. This API allows you to create, manage, and execute swarms from your own application.

-

-#### Steps:

-

-1. Create a Swarms API key [HERE](https://swarms.world)

-2. Upload your key to the `.env` file like so: `SWARMS_API_KEY=`

-3. Use the following code to create and execute a swarm:

-4. Read our docs for more information for deeper customization [HERE](https://docs.swarms.world/en/latest/swarms_cloud/swarms_api/)

+from swarms import Agent, SpreadSheetSwarm

+# Define a list of tasks (e.g., social media posts to generate)

+platforms = ["Twitter", "LinkedIn", "Instagram"]

-```python

-import json

-from swarms.structs.swarms_api import (

- SwarmsAPIClient,

- SwarmRequest,

- AgentInput,

-)

-import os

-

+# Create an agent for each task

agents = [

- AgentInput(

- agent_name="Medical Researcher",

- description="Conducts medical research and analysis",

- system_prompt="You are a medical researcher specializing in clinical studies.",

- max_loops=1,

- model_name="gpt-4o",

- role="worker",

- ),

- AgentInput(

- agent_name="Medical Diagnostician",

- description="Provides medical diagnoses based on symptoms and test results",

- system_prompt="You are a medical diagnostician with expertise in identifying diseases.",

- max_loops=1,

- model_name="gpt-4o",

- role="worker",

- ),

- AgentInput(

- agent_name="Pharmaceutical Expert",

- description="Advises on pharmaceutical treatments and drug interactions",

- system_prompt="You are a pharmaceutical expert knowledgeable about medications and their effects.",

- max_loops=1,

- model_name="gpt-4o",

- role="worker",

- ),

+ Agent(

+ agent_name=f"{platform}-Marketer",

+ system_prompt=f"Generate a real estate marketing post for {platform}.",

+ model_name="gpt-4o-mini",

+ )

+ for platform in platforms

]

-swarm_request = SwarmRequest(

- name="Medical Swarm",

- description="A swarm for medical research and diagnostics",

+# Initialize the swarm to run these agents concurrently

+swarm = SpreadSheetSwarm(

agents=agents,

- max_loops=1,

- swarm_type="ConcurrentWorkflow",

- output_type="str",

- return_history=True,

- task="What is the cause of the common cold?",

-)

-

-client = SwarmsAPIClient(

- api_key=os.getenv("SWARMS_API_KEY"), format_type="json"

+ autosave_on=True,

+ save_file_path="marketing_posts.csv",

)

-response = client.run(swarm_request)

-

-print(json.dumps(response, indent=4))

-

-

+# Run the swarm with a single, shared task description

+property_description = "A beautiful 3-bedroom house in sunny California."

+swarm.run(task=f"Generate a post about: {property_description}")

+# Check marketing_posts.csv for the results!

```

+---

-### `SequentialWorkflow`

+### AgentRearrange

-The SequentialWorkflow in the Swarms framework enables sequential task execution across multiple Agent objects. Each agent's output serves as input for the next agent in the sequence, continuing until reaching the specified maximum number of loops (max_loops). This workflow is particularly well-suited for tasks requiring a specific order of operations, such as data processing pipelines. To learn more, visit: [Learn More](https://docs.swarms.world/en/latest/swarms/structs/sequential_workflow/)

+Inspired by `einsum`, `AgentRearrange` lets you define complex, non-linear relationships between agents using a simple string-based syntax. This architecture is well suited to orchestrating dynamic workflows where agents work in parallel, in sequence, or in a combination of both. [Learn more](https://docs.swarms.world/en/latest/swarms/structs/agent_rearrange/).

```python

-import os

-from swarms import Agent, SequentialWorkflow

-from swarm_models import OpenAIChat

-

-# model = Anthropic(anthropic_api_key=os.getenv("ANTHROPIC_API_KEY"))

-company = "Nvidia"

-# Get the OpenAI API key from the environment variable

-api_key = os.getenv("GROQ_API_KEY")

-

-# Model

-model = OpenAIChat(

- openai_api_base="https://api.groq.com/openai/v1",

- openai_api_key=api_key,

- model_name="llama-3.1-70b-versatile",

- temperature=0.1,

-)

-

-

-# Initialize the Managing Director agent

-managing_director = Agent(

- agent_name="Managing-Director",

- system_prompt=f"""

- As the Managing Director at Blackstone, your role is to oversee the entire investment analysis process for potential acquisitions.

- Your responsibilities include:

- 1. Setting the overall strategy and direction for the analysis

- 2. Coordinating the efforts of the various team members and ensuring a comprehensive evaluation

- 3. Reviewing the findings and recommendations from each team member

- 4. Making the final decision on whether to proceed with the acquisition

-

- For the current potential acquisition of {company}, direct the tasks for the team to thoroughly analyze all aspects of the company, including its financials, industry position, technology, market potential, and regulatory compliance. Provide guidance and feedback as needed to ensure a rigorous and unbiased assessment.

- """,

- llm=model,

- max_loops=1,

- dashboard=False,

- streaming_on=True,

- verbose=True,

- stopping_token="",

- state_save_file_type="json",

- saved_state_path="managing-director.json",

-)

-

-# Initialize the Vice President of Finance

-vp_finance = Agent(

- agent_name="VP-Finance",

- system_prompt=f"""

- As the Vice President of Finance at Blackstone, your role is to lead the financial analysis of potential acquisitions.

- For the current potential acquisition of {company}, your tasks include:

- 1. Conducting a thorough review of {company}' financial statements, including income statements, balance sheets, and cash flow statements

- 2. Analyzing key financial metrics such as revenue growth, profitability margins, liquidity ratios, and debt levels

- 3. Assessing the company's historical financial performance and projecting future performance based on assumptions and market conditions

- 4. Identifying any financial risks or red flags that could impact the acquisition decision

- 5. Providing a detailed report on your findings and recommendations to the Managing Director

-

- Be sure to consider factors such as the sustainability of {company}' business model, the strength of its customer base, and its ability to generate consistent cash flows. Your analysis should be data-driven, objective, and aligned with Blackstone's investment criteria.

- """,

- llm=model,

- max_loops=1,

- dashboard=False,

- streaming_on=True,

- verbose=True,

- stopping_token="",

- state_save_file_type="json",

- saved_state_path="vp-finance.json",

-)

-

-# Initialize the Industry Analyst

-industry_analyst = Agent(

- agent_name="Industry-Analyst",

- system_prompt=f"""

- As the Industry Analyst at Blackstone, your role is to provide in-depth research and analysis on the industries and markets relevant to potential acquisitions.

- For the current potential acquisition of {company}, your tasks include:

- 1. Conducting a comprehensive analysis of the industrial robotics and automation solutions industry, including market size, growth rates, key trends, and future prospects

- 2. Identifying the major players in the industry and assessing their market share, competitive strengths and weaknesses, and strategic positioning

- 3. Evaluating {company}' competitive position within the industry, including its market share, differentiation, and competitive advantages

- 4. Analyzing the key drivers and restraints for the industry, such as technological advancements, labor costs, regulatory changes, and economic conditions

- 5. Identifying potential risks and opportunities for {company} based on the industry analysis, such as disruptive technologies, emerging markets, or shifts in customer preferences

-

- Your analysis should provide a clear and objective assessment of the attractiveness and future potential of the industrial robotics industry, as well as {company}' positioning within it. Consider both short-term and long-term factors, and provide evidence-based insights to inform the investment decision.

- """,

- llm=model,

- max_loops=1,

- dashboard=False,

- streaming_on=True,

- verbose=True,

- stopping_token="",

- state_save_file_type="json",

- saved_state_path="industry-analyst.json",

-)

-

-# Initialize the Technology Expert

-tech_expert = Agent(

- agent_name="Tech-Expert",

- system_prompt=f"""

- As the Technology Expert at Blackstone, your role is to assess the technological capabilities, competitive advantages, and potential risks of companies being considered for acquisition.

- For the current potential acquisition of {company}, your tasks include:

- 1. Conducting a deep dive into {company}' proprietary technologies, including its robotics platforms, automation software, and AI capabilities

- 2. Assessing the uniqueness, scalability, and defensibility of {company}' technology stack and intellectual property

- 3. Comparing {company}' technologies to those of its competitors and identifying any key differentiators or technology gaps

- 4. Evaluating {company}' research and development capabilities, including its innovation pipeline, engineering talent, and R&D investments

- 5. Identifying any potential technology risks or disruptive threats that could impact {company}' long-term competitiveness, such as emerging technologies or expiring patents

-

- Your analysis should provide a comprehensive assessment of {company}' technological strengths and weaknesses, as well as the sustainability of its competitive advantages. Consider both the current state of its technology and its future potential in light of industry trends and advancements.

- """,

- llm=model,

- max_loops=1,

- dashboard=False,

- streaming_on=True,

- verbose=True,

- stopping_token="",

- state_save_file_type="json",

- saved_state_path="tech-expert.json",

-)

-

-# Initialize the Market Researcher

-market_researcher = Agent(

- agent_name="Market-Researcher",

- system_prompt=f"""

- As the Market Researcher at Blackstone, your role is to analyze the target company's customer base, market share, and growth potential to assess the commercial viability and attractiveness of the potential acquisition.

- For the current potential acquisition of {company}, your tasks include:

- 1. Analyzing {company}' current customer base, including customer segmentation, concentration risk, and retention rates

- 2. Assessing {company}' market share within its target markets and identifying key factors driving its market position

- 3. Conducting a detailed market sizing and segmentation analysis for the industrial robotics and automation markets, including identifying high-growth segments and emerging opportunities

- 4. Evaluating the demand drivers and sales cycles for {company}' products and services, and identifying any potential risks or limitations to adoption

- 5. Developing financial projections and estimates for {company}' revenue growth potential based on the market analysis and assumptions around market share and penetration

-

- Your analysis should provide a data-driven assessment of the market opportunity for {company} and the feasibility of achieving our investment return targets. Consider both bottom-up and top-down market perspectives, and identify any key sensitivities or assumptions in your projections.

- """,

- llm=model,

- max_loops=1,

- dashboard=False,

- streaming_on=True,

- verbose=True,

- stopping_token="",

- state_save_file_type="json",

- saved_state_path="market-researcher.json",

-)

-

-# Initialize the Regulatory Specialist

-regulatory_specialist = Agent(

- agent_name="Regulatory-Specialist",

- system_prompt=f"""

- As the Regulatory Specialist at Blackstone, your role is to identify and assess any regulatory risks, compliance requirements, and potential legal liabilities associated with potential acquisitions.

- For the current potential acquisition of {company}, your tasks include:

- 1. Identifying all relevant regulatory bodies and laws that govern the operations of {company}, including industry-specific regulations, labor laws, and environmental regulations

- 2. Reviewing {company}' current compliance policies, procedures, and track record to identify any potential gaps or areas of non-compliance

- 3. Assessing the potential impact of any pending or proposed changes to relevant regulations that could affect {company}' business or create additional compliance burdens

- 4. Evaluating the potential legal liabilities and risks associated with {company}' products, services, and operations, including product liability, intellectual property, and customer contracts

- 5. Providing recommendations on any regulatory or legal due diligence steps that should be taken as part of the acquisition process, as well as any post-acquisition integration considerations

-

- Your analysis should provide a comprehensive assessment of the regulatory and legal landscape surrounding {company}, and identify any material risks or potential deal-breakers. Consider both the current state and future outlook, and provide practical recommendations to mitigate identified risks.

- """,

- llm=model,

- max_loops=1,

- dashboard=False,

- streaming_on=True,

- verbose=True,

- stopping_token="",

- state_save_file_type="json",

- saved_state_path="regulatory-specialist.json",

-)

-

-# Create a list of agents

-agents = [

- managing_director,

- vp_finance,

- industry_analyst,

- tech_expert,

- market_researcher,

- regulatory_specialist,

-]

+from swarms import Agent, AgentRearrange

+# Define agents

+researcher = Agent(agent_name="researcher", model_name="gpt-4o-mini")

+writer = Agent(agent_name="writer", model_name="gpt-4o-mini")

+editor = Agent(agent_name="editor", model_name="gpt-4o-mini")

-swarm = SequentialWorkflow(

- name="blackstone-private-equity-advisors",

- agents=agents,

-)

+# Define a flow: researcher sends work to both writer and editor simultaneously

+# This is a one-to-many relationship

+flow = "researcher -> writer, editor"

-print(

- swarm.run(

- "Analyze nvidia if it's a good deal to invest in now 10B"

- )

+# Create the rearrangement system

+rearrange_system = AgentRearrange(

+ agents=[researcher, writer, editor],

+ flow=flow,

)

+# Run the system

+# The researcher will generate content, and then both the writer and editor

+# will process that content in parallel.

+outputs = rearrange_system.run("Analyze the impact of AI on modern cinema.")

+print(outputs)

```
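To make the flow notation concrete, here is a minimal sketch of how a string such as `"researcher -> writer, editor"` can be read as ordered stages: `->` separates steps that run in sequence, and `,` separates agents that share a step. The `parse_flow` helper is hypothetical and written only for illustration; it is not the parser `AgentRearrange` uses internally.

```python
from typing import List


def parse_flow(flow: str) -> List[List[str]]:
    """Split a flow string into ordered stages of agent names.

    Hypothetical helper for illustration; not part of the swarms API.
    """
    stages = []
    for step in flow.split("->"):
        # Agents separated by commas belong to the same stage.
        names = [name.strip() for name in step.split(",") if name.strip()]
        if not names:
            raise ValueError(f"empty stage in flow: {flow!r}")
        stages.append(names)
    return stages


stages = parse_flow("researcher -> writer, editor")
for index, names in enumerate(stages, start=1):
    mode = "in parallel" if len(names) > 1 else "alone"
    print(f"stage {index}: {', '.join(names)} ({mode})")
```

Reading the flow this way shows why `a -> a1 -> a2` describes a chain while `a -> a1, a2` describes a fan-out from a single agent.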

-------

-

-## `AgentRearrange`

-

-The `AgentRearrange` orchestration technique, inspired by Einops and einsum, enables you to define and map relationships between multiple agents. This powerful tool facilitates the orchestration of complex workflows by allowing you to specify both linear and concurrent relationships. For example, you can create sequential workflows like `a -> a1 -> a2 -> a3` or parallel workflows where a single agent distributes tasks to multiple agents simultaneously: `a -> a1, a2, a3`. This flexibility enables the creation of highly efficient and dynamic workflows, with agents operating either in parallel or sequence as required. As a valuable addition to the swarms library, `AgentRearrange` provides enhanced flexibility and precise control over agent orchestration. For comprehensive information and examples, visit the [official documentation](https://docs.swarms.world/en/latest/swarms/structs/agent_rearrange/). [Watch my video tutorial on agent rearrange!](https://youtu.be/Rq8wWQ073mg)

+

+----

-GraphWorkflow is a workflow management system using a directed acyclic graph (DAG) to orchestrate complex tasks. Nodes (agents or tasks) and edges define dependencies, with agents executing tasks concurrently. It features entry/end points, visualization for debugging, and scalability for dynamic task assignment. Benefits include concurrency, flexibility, scalability, and clear workflow visualization. [Learn more:](https://docs.swarms.world/en/latest/swarms/structs/graph_swarm/) The `run` method returns a dictionary containing the execution results of all nodes in the graph.

+### SwarmRouter: The Universal Swarm Orchestrator

+The `SwarmRouter` simplifies building complex workflows by providing a single interface to run any type of swarm. Instead of importing and managing different swarm classes, you can dynamically select the one you need just by changing the `swarm_type` parameter. [Read the full documentation](https://docs.swarms.world/en/latest/swarms/structs/swarm_router/)

+This makes your code cleaner and more flexible, allowing you to switch between different multi-agent strategies with ease. Here's a complete example that shows how to define agents and then use `SwarmRouter` to execute the same task using different collaborative strategies.

```python

-from swarms import Agent, Edge, GraphWorkflow, Node, NodeType

-

-# Initialize agents with model_name parameter

-agent1 = Agent(

- agent_name="Agent1",

- model_name="openai/gpt-4o-mini", # Using provider prefix

- temperature=0.5,

- max_tokens=4000,

- max_loops=1,

- autosave=True,

- dashboard=True,

-)

+from swarms import Agent

+from swarms.structs.swarm_router import SwarmRouter, SwarmType

-agent2 = Agent(

- agent_name="Agent2",

- model_name="openai/gpt-4o-mini", # Using provider prefix

- temperature=0.5,

- max_tokens=4000,

- max_loops=1,

- autosave=True,

- dashboard=True,

+# Define a few generic agents

+writer = Agent(agent_name="Writer", system_prompt="You are a creative writer.", model_name="gpt-4o-mini")

+editor = Agent(agent_name="Editor", system_prompt="You are an expert editor for stories.", model_name="gpt-4o-mini")

+reviewer = Agent(agent_name="Reviewer", system_prompt="You are a final reviewer who gives a score.", model_name="gpt-4o-mini")

+

+# The agents and task will be the same for all examples

+agents = [writer, editor, reviewer]

+task = "Write a short story about a robot who discovers music."

+

+# --- Example 1: SequentialWorkflow ---

+# Agents run one after another in a chain: Writer -> Editor -> Reviewer.

+print("Running a Sequential Workflow...")

+sequential_router = SwarmRouter(swarm_type=SwarmType.SequentialWorkflow, agents=agents)

+sequential_output = sequential_router.run(task)

+print(f"Final Sequential Output:\n{sequential_output}\n")

+

+# --- Example 2: ConcurrentWorkflow ---

+# All agents receive the same initial task and run at the same time.

+print("Running a Concurrent Workflow...")

+concurrent_router = SwarmRouter(swarm_type=SwarmType.ConcurrentWorkflow, agents=agents)

+concurrent_outputs = concurrent_router.run(task)

+# This returns a dictionary of each agent's output

+for agent_name, output in concurrent_outputs.items():

+ print(f"Output from {agent_name}:\n{output}\n")

+

+# --- Example 3: MixtureOfAgents ---

+# All agents run in parallel, and a special 'aggregator' agent synthesizes their outputs.

+print("Running a Mixture of Agents Workflow...")

+aggregator = Agent(

+ agent_name="Aggregator",

+ system_prompt="Combine the story, edits, and review into a final document.",

+ model_name="gpt-4o-mini"

+)

+moa_router = SwarmRouter(

+ swarm_type=SwarmType.MixtureOfAgents,

+ agents=agents,

+ aggregator_agent=aggregator, # MoA requires an aggregator

)

-

-def sample_task():

- print("Running sample task")

- return "Task completed"

-

-wf_graph = GraphWorkflow()

-wf_graph.add_node(Node(id="agent1", type=NodeType.AGENT, agent=agent1))

-wf_graph.add_node(Node(id="agent2", type=NodeType.AGENT, agent=agent2))

-wf_graph.add_node(Node(id="task1", type=NodeType.TASK, callable=sample_task))

-

-wf_graph.add_edge(Edge(source="agent1", target="task1"))

-wf_graph.add_edge(Edge(source="agent2", target="task1"))

-

-wf_graph.set_entry_points(["agent1", "agent2"])

-wf_graph.set_end_points(["task1"])

-

-print(wf_graph.visualize())

-

-results = wf_graph.run()

-print("Execution results:", results)

+aggregated_output = moa_router.run(task)

+print(f"Final Aggregated Output:\n{aggregated_output}\n")

```

------

+Because every strategy is selected through the same `swarm_type` parameter, the three examples above differ only in the arguments passed to `SwarmRouter`; the agents and the task stay the same. This keeps the cost of trying a different collaborative strategy to a one-line change.
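The routing idea can be sketched without any LLM calls. The example below is an assumption-laden illustration, not the library's implementation: agents are replaced by plain functions, and `route`, `run_sequential`, `run_concurrent`, and `run_mixture` are hypothetical names. It only shows how one entry point can dispatch the same agents and task to different strategies.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Optional, Union

# Hypothetical stand-in for agents: a name mapped to a plain function.
StubAgents = Dict[str, Callable[[str], str]]


def run_sequential(agents: StubAgents, task: str) -> str:
    """Chain the agents: each one receives the previous agent's output."""
    result = task
    for fn in agents.values():
        result = fn(result)
    return result


def run_concurrent(agents: StubAgents, task: str) -> Dict[str, str]:
    """Give every agent the same task and collect outputs by agent name."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, task) for name, fn in agents.items()}
        return {name: future.result() for name, future in futures.items()}


def run_mixture(agents: StubAgents, task: str,
                aggregator: Callable[[str], str]) -> str:
    """Run all agents concurrently, then let an aggregator combine the outputs."""
    outputs = run_concurrent(agents, task)
    return aggregator(" | ".join(outputs.values()))


def route(strategy: str, agents: StubAgents, task: str,
          aggregator: Optional[Callable[[str], str]] = None
          ) -> Union[str, Dict[str, str]]:
    """Single entry point that picks a strategy by name."""
    if strategy == "sequential":
        return run_sequential(agents, task)
    if strategy == "concurrent":
        return run_concurrent(agents, task)
    if strategy == "mixture":
        if aggregator is None:
            raise ValueError("the mixture strategy needs an aggregator")
        return run_mixture(agents, task, aggregator)
    raise ValueError(f"unknown strategy: {strategy!r}")


stub_agents: StubAgents = {
    "Writer": lambda text: f"draft({text})",
    "Editor": lambda text: f"edit({text})",
    "Reviewer": lambda text: f"review({text})",
}

print(route("sequential", stub_agents, "story"))
print(route("concurrent", stub_agents, "story"))
print(route("mixture", stub_agents, "story", aggregator=lambda t: f"final[{t}]"))
```

Only the strategy name changes between the three calls, which mirrors how the examples above vary `swarm_type` while reusing the same `agents` list and `task`.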

-## `MixtureOfAgents`

+-------

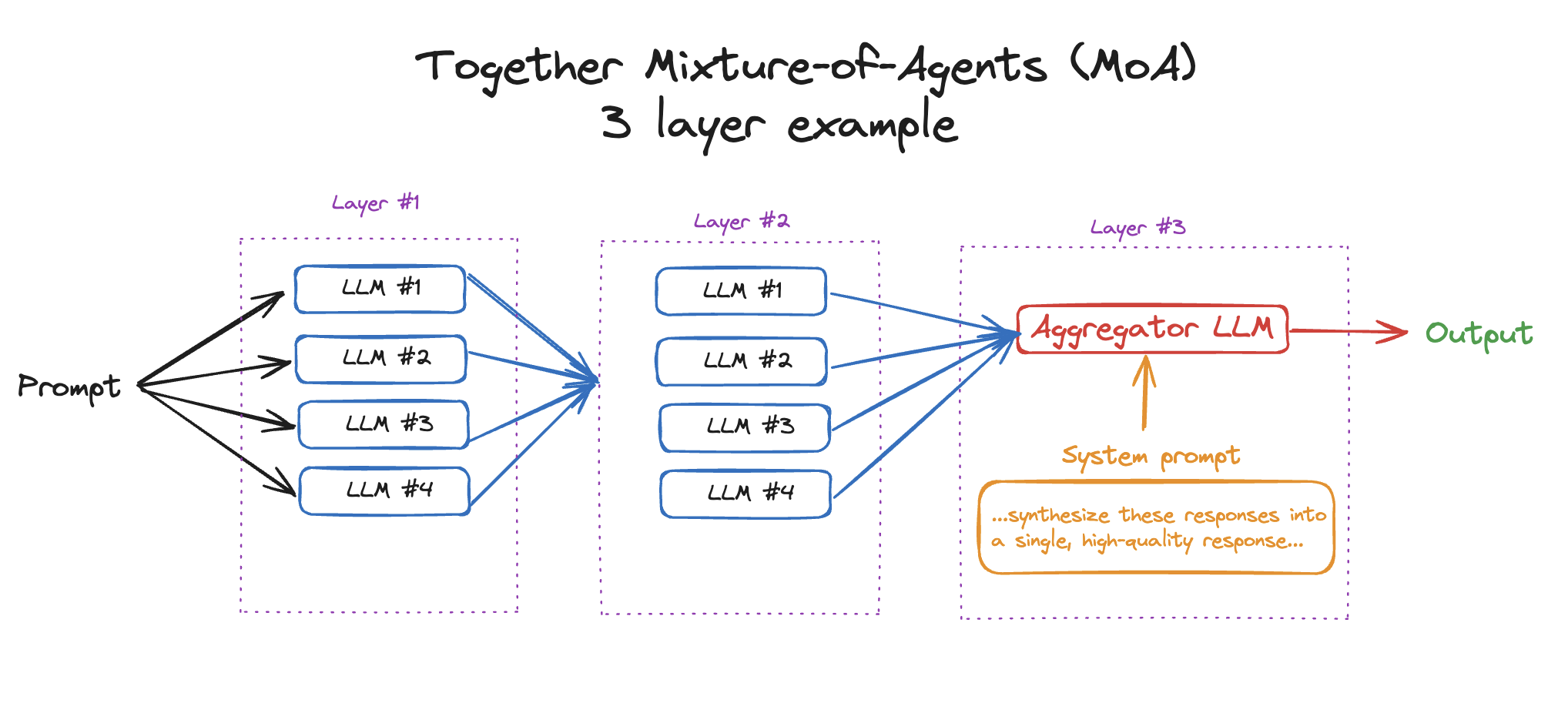

-The MixtureOfAgents architecture, inspired by together.ai's paper (arXiv:2406.04692), achieves SOTA performance on AlpacaEval 2.0, MT-Bench, and FLASK, surpassing GPT-4 Omni. It processes tasks via parallel agent collaboration and sequential layering, with documentation [HERE](https://docs.swarms.world/en/latest/swarms/structs/moa/)

+### MixtureOfAgents (MoA)

+The `MixtureOfAgents` architecture processes tasks by feeding them to multiple "expert" agents in parallel. Their diverse outputs are then synthesized by an aggregator agent to produce a final, high-quality result. [Learn more here](https://docs.swarms.world/en/latest/swarms/examples/moa_example/)

```python

-

-import os