- -

-

-

- -

-TheBloke's LLM work is generously supported by a grant from andreessen horowitz (a16z)

-# Mistral 7B OpenOrca - GGUF
-- Model creator: [OpenOrca](https://huggingface.co/Open-Orca)
-- Original model: [Mistral 7B OpenOrca](https://huggingface.co/Open-Orca/Mistral-7B-OpenOrca)
-
-## Description
-
-This repo contains GGUF format model files for [OpenOrca's Mistral 7B OpenOrca](https://huggingface.co/Open-Orca/Mistral-7B-OpenOrca).
-
-### About GGUF
-
-GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.
-
-Here is an incomplete list of clients and libraries that are known to support GGUF:
-
-* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.
-* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.
-* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for storytelling.
-* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.
-* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.
-* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.
-* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.
-* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.
-* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.
-
-## Repositories available
-
-* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-AWQ)
-* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GPTQ)
-* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF)
-* [OpenOrca's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/Open-Orca/Mistral-7B-OpenOrca)
-
-## Prompt template: ChatML
-
-```
-<|im_start|>system
-{system_message}<|im_end|>
-<|im_start|>user
-{prompt}<|im_end|>
-<|im_start|>assistant
-
-```
-
-## Compatibility
-
-These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)
-
-They are also compatible with many third party UIs and libraries - please see the list at the top of this README.
-
-## Explanation of quantisation methods
-

-

-

-

-

-## Provided files

-

-| Name | Quant method | Bits | Size | Max RAM required | Use case |

-| ---- | ---- | ---- | ---- | ---- | ----- |

-| [mistral-7b-openorca.Q2_K.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q2_K.gguf) | Q2_K | 2 | 3.08 GB| 5.58 GB | smallest, significant quality loss - not recommended for most purposes |

-| [mistral-7b-openorca.Q3_K_S.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q3_K_S.gguf) | Q3_K_S | 3 | 3.16 GB| 5.66 GB | very small, high quality loss |

-| [mistral-7b-openorca.Q3_K_M.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q3_K_M.gguf) | Q3_K_M | 3 | 3.52 GB| 6.02 GB | very small, high quality loss |

-| [mistral-7b-openorca.Q3_K_L.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q3_K_L.gguf) | Q3_K_L | 3 | 3.82 GB| 6.32 GB | small, substantial quality loss |

-| [mistral-7b-openorca.Q4_0.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q4_0.gguf) | Q4_0 | 4 | 4.11 GB| 6.61 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

-| [mistral-7b-openorca.Q4_K_S.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q4_K_S.gguf) | Q4_K_S | 4 | 4.14 GB| 6.64 GB | small, greater quality loss |

-| [mistral-7b-openorca.Q4_K_M.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q4_K_M.gguf) | Q4_K_M | 4 | 4.37 GB| 6.87 GB | medium, balanced quality - recommended |

-| [mistral-7b-openorca.Q5_0.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q5_0.gguf) | Q5_0 | 5 | 5.00 GB| 7.50 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

-| [mistral-7b-openorca.Q5_K_S.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q5_K_S.gguf) | Q5_K_S | 5 | 5.00 GB| 7.50 GB | large, low quality loss - recommended |

-| [mistral-7b-openorca.Q5_K_M.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q5_K_M.gguf) | Q5_K_M | 5 | 5.13 GB| 7.63 GB | large, very low quality loss - recommended |

-| [mistral-7b-openorca.Q6_K.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q6_K.gguf) | Q6_K | 6 | 5.94 GB| 8.44 GB | very large, extremely low quality loss |

-| [mistral-7b-openorca.Q8_0.gguf](https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF/blob/main/mistral-7b-openorca.Q8_0.gguf) | Q8_0 | 8 | 7.70 GB| 10.20 GB | very large, extremely low quality loss - not recommended |

-

-**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
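In the table above, each "Max RAM required" figure is the file size plus 2.50 GB. As a minimal sketch of how one might choose a file for a given RAM budget, assuming no GPU offloading: the helper name `pick_quant` is hypothetical, and the figures are copied from the table.

```python
from typing import Optional

# (quant method, max RAM in GB) copied from the "Provided files" table,
# kept in the table's order (smallest to largest).
QUANTS = [
    ("Q2_K", 5.58), ("Q3_K_S", 5.66), ("Q3_K_M", 6.02), ("Q3_K_L", 6.32),
    ("Q4_0", 6.61), ("Q4_K_S", 6.64), ("Q4_K_M", 6.87), ("Q5_0", 7.50),
    ("Q5_K_S", 7.50), ("Q5_K_M", 7.63), ("Q6_K", 8.44), ("Q8_0", 10.20),
]


def pick_quant(ram_budget_gb: float) -> Optional[str]:
    """Return the last listed quant whose max RAM fits the budget (hypothetical helper)."""
    fitting = [name for name, ram in QUANTS if ram <= ram_budget_gb]
    return fitting[-1] if fitting else None


print(pick_quant(7.0))
```

This only considers memory. The "Use case" column still matters, since the legacy Q4_0 and Q5_0 files are listed with a note to prefer a K-quant instead.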

-

-

-

-

-

-

-## How to download GGUF files

-

-**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

-

-The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

-- LM Studio

-- LoLLMS Web UI

-- Faraday.dev

-

-### In `text-generation-webui`

-

-Under Download Model, you can enter the model repo: TheBloke/Mistral-7B-OpenOrca-GGUF and below it, a specific filename to download, such as: mistral-7b-openorca.Q4_K_M.gguf.

-

-Then click Download.

-

-### On the command line, including multiple files at once

-

-I recommend using the `huggingface-hub` Python library:

-

-```shell

-pip3 install huggingface-hub

-```

-

-Then you can download any individual model file to the current directory, at high speed, with a command like this:

-

-```shell

-huggingface-cli download TheBloke/Mistral-7B-OpenOrca-GGUF mistral-7b-openorca.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

-```

-

-Click to see details

-The new methods available are:
-* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).
-* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.
-* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.
-* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K, resulting in 5.5 bpw.
-* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.
-
-Refer to the Provided Files table below to see what files use which methods, and how.
-
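The bits-per-weight figures for Q3_K through Q6_K can be reproduced with simple accounting. The sketch below rests on an assumption that the text does not state: each super-block also stores one 16-bit (fp16) scale, plus one 16-bit min for "type-1" formats. The function name and parameters are illustrative only. Q2_K is left out because this simple accounting does not reproduce the 2.5625 figure quoted above.

```python
def bits_per_weight(weight_bits, blocks, block_size, scale_bits, has_mins):
    """Effective bits per weight for one k-quant super-block.

    Assumption (not stated in the README): every super-block carries one
    fp16 scale, and "type-1" formats carry one extra fp16 min.
    """
    weights = blocks * block_size
    fields = 2 if has_mins else 1             # scale only, or scale + min
    total_bits = (
        weights * weight_bits                 # the quantized weights
        + blocks * scale_bits * fields        # per-block scales (and mins)
        + 16 * fields                         # assumed fp16 super-block fields
    )
    return total_bits / weights


print(bits_per_weight(3, 16, 16, 6, has_mins=False))  # Q3_K
print(bits_per_weight(4, 8, 32, 6, has_mins=True))    # Q4_K
print(bits_per_weight(5, 8, 32, 6, has_mins=True))    # Q5_K
print(bits_per_weight(6, 16, 16, 8, has_mins=False))  # Q6_K
```

For Q4_K, for example, a super-block holds 8 x 32 = 256 weights, so 1024 weight bits plus 96 bits of block scales and mins plus 32 assumed fp16 bits gives 1152 / 256 = 4.5 bpw.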

-

-

-

-

-## Example `llama.cpp` command

-

-Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

-

-```shell

-./main -ngl 32 -m mistral-7b-openorca.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<|im_start|>system\n{system_message}<|im_end|>\n<|im_start|>user\n{prompt}<|im_end|>\n<|im_start|>assistant"

-```

-

-Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

-

-Change `-c 2048` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

-

-If you want to have a chat-style conversation, replace the `-p
-
-More advanced huggingface-cli download usage
-
-You can also download multiple files at once with a pattern:
-
-```shell
-huggingface-cli download TheBloke/Mistral-7B-OpenOrca-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'
-```
-
-For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).
-
-To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:
-
-```shell
-pip3 install hf_transfer
-```
-
-And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:
-
-```shell
-HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Mistral-7B-OpenOrca-GGUF mistral-7b-openorca.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False
-```
-
-Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.
-
-🐋 Mistral-7B-OpenOrca 🐋

- - - -[ ](https://atlas.nomic.ai/map/c1b88b47-2d9b-47e0-9002-b80766792582/2560fd25-52fe-42f1-a58f-ff5eccc890d2)

-

-

-We are in the process of training more models, so keep a lookout on our org for releases coming soon with exciting partners.

-

-We will also give sneak-peek announcements on our Discord, which you can find here:

-

-https://AlignmentLab.ai

-

-or check the OpenAccess AI Collective Discord for more information about Axolotl trainer here:

-

-https://discord.gg/5y8STgB3P3

-

-

-# Quantized Models

-

-Quantized versions of this model are generously made available by [TheBloke](https://huggingface.co/TheBloke).

-

-- AWQ: https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-AWQ

-- GPTQ: https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GPTQ

-- GGUF: https://huggingface.co/TheBloke/Mistral-7B-OpenOrca-GGUF

-

-

-# Prompt Template

-

-We used [OpenAI's Chat Markup Language (ChatML)](https://github.com/openai/openai-python/blob/main/chatml.md) format, with `<|im_start|>` and `<|im_end|>` tokens added to support this.

-

-## Example Prompt Exchange

-

-```

-<|im_start|>system

-You are MistralOrca, a large language model trained by Alignment Lab AI. Write out your reasoning step-by-step to be sure you get the right answers!

-<|im_end|>

-<|im_start|>user

-How are you?<|im_end|>

-<|im_start|>assistant

-I am doing well!<|im_end|>

-<|im_start|>user

-Please tell me about how mistral winds have attracted super-orcas.<|im_end|>

-```
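A minimal sketch of assembling such a prompt programmatically. The helper name `build_chatml_prompt` is hypothetical, and the newline placement follows the ChatML template shown in this README rather than any official tokenizer implementation.

```python
def build_chatml_prompt(system_message, turns):
    """Build a ChatML prompt string (hypothetical helper).

    turns is a list of (role, content) pairs such as ("user", "Hi").
    The result ends with an open assistant turn for the model to complete.
    """
    parts = [f"<|im_start|>system\n{system_message}<|im_end|>\n"]
    for role, content in turns:
        parts.append(f"<|im_start|>{role}\n{content}<|im_end|>\n")
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = build_chatml_prompt(
    "You are MistralOrca, a large language model trained by Alignment Lab AI.",
    [("user", "How are you?")],
)
print(prompt)
```

Leaving the final assistant turn open is what signals the model to generate the reply. Generation would normally be stopped at the next `<|im_end|>` token.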

-

-

-# Evaluation

-

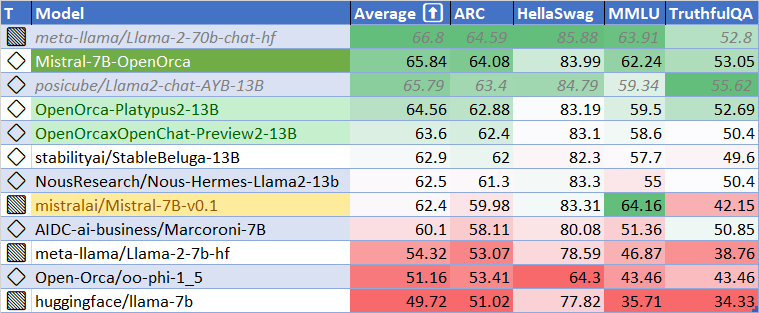

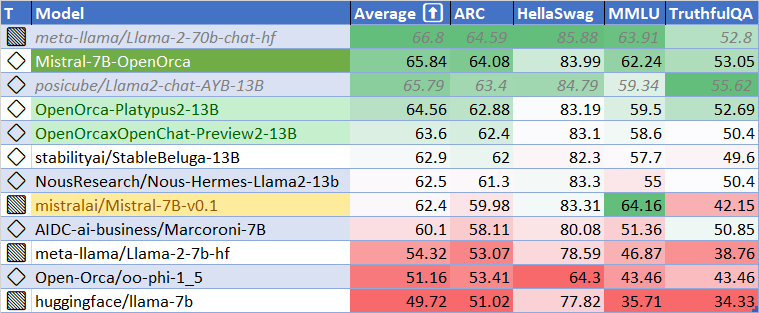

-## HuggingFace Leaderboard Performance

-

-We have evaluated using the methodology and tools for the HuggingFace Leaderboard, and find that we have dramatically improved upon the base model.

-We find **105%** of the base model's performance on HF Leaderboard evals, averaging **65.33**.

-

-At release time, this beats all 7B models, and all but one 13B.

-

-

-

-

-| Metric | Value |

-|-----------------------|-------|

-| MMLU (5-shot) | 61.73 |

-| ARC (25-shot) | 63.57 |

-| HellaSwag (10-shot) | 83.79 |

-| TruthfulQA (0-shot) | 52.24 |

-| Avg. | 65.33 |
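The reported average is the plain mean of the four benchmark scores, which can be checked directly. The variable names below are illustrative only.

```python
# Scores copied from the table above.
scores = {
    "MMLU (5-shot)": 61.73,
    "ARC (25-shot)": 63.57,
    "HellaSwag (10-shot)": 83.79,
    "TruthfulQA (0-shot)": 52.24,
}

# Unweighted mean across the four benchmarks.
average = sum(scores.values()) / len(scores)
print(f"{average:.2f}")
```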

-

-We use [Language Model Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness) to run the benchmark tests above, using the same version as the HuggingFace LLM Leaderboard.

-

-

-## AGIEval Performance

-

-We compare our results to the base Mistral-7B model (using LM Evaluation Harness).

-

-We find **129%** of the base model's performance on AGI Eval, averaging **0.397**.

-As well, we significantly improve upon the official `mistralai/Mistral-7B-Instruct-v0.1` finetuning, achieving **119%** of their performance.

-

-

-

-## BigBench-Hard Performance

-

-We find **119%** of the base model's performance on BigBench-Hard, averaging **0.416**.

-

-

-

-

-# Dataset

-

-We used a curated, filtered selection of most of the GPT-4 augmented data from our OpenOrca dataset, which aims to reproduce the Orca Research Paper dataset.

-

-

-# Training

-

-We trained with 8x A6000 GPUs for 62 hours, completing 4 epochs of full fine tuning on our dataset in one training run.

-Commodity cost was ~$400.

-

-

-# Citation

-

-```bibtex

-@software{lian2023mistralorca1,

- title = {MistralOrca: Mistral-7B Model Instruct-tuned on Filtered OpenOrcaV1 GPT-4 Dataset},

- author = {Wing Lian and Bleys Goodson and Guan Wang and Eugene Pentland and Austin Cook and Chanvichet Vong and "Teknium"},

- year = {2023},

- publisher = {HuggingFace},

- journal = {HuggingFace repository},

- howpublished = {\url{https://huggingface.co/Open-Orca/Mistral-7B-OpenOrca}},

-}

-@misc{mukherjee2023orca,

- title={Orca: Progressive Learning from Complex Explanation Traces of GPT-4},

- author={Subhabrata Mukherjee and Arindam Mitra and Ganesh Jawahar and Sahaj Agarwal and Hamid Palangi and Ahmed Awadallah},

- year={2023},

- eprint={2306.02707},

- archivePrefix={arXiv},

- primaryClass={cs.CL}

-}

-@misc{longpre2023flan,

- title={The Flan Collection: Designing Data and Methods for Effective Instruction Tuning},

- author={Shayne Longpre and Le Hou and Tu Vu and Albert Webson and Hyung Won Chung and Yi Tay and Denny Zhou and Quoc V. Le and Barret Zoph and Jason Wei and Adam Roberts},

- year={2023},

- eprint={2301.13688},

- archivePrefix={arXiv},

- primaryClass={cs.AI}

-}

-```

-

-

diff --git a/swarms/modelui/models/config.json b/swarms/modelui/models/config.json

deleted file mode 100644

index 9f0f76f8..00000000

--- a/swarms/modelui/models/config.json

+++ /dev/null

@@ -1,3 +0,0 @@

-{

- "model_type": "mistral"

-}

\ No newline at end of file

diff --git a/swarms/modelui/models/config.yaml b/swarms/modelui/models/config.yaml

deleted file mode 100644

index 00db01d1..00000000

--- a/swarms/modelui/models/config.yaml

+++ /dev/null

@@ -1,182 +0,0 @@

-.*(llama|alpac|vicuna|guanaco|koala|llava|wizardlm|metharme|pygmalion-7b|pygmalion-2|mythalion|wizard-mega|openbuddy|vigogne|h2ogpt-research|manticore):

- model_type: 'llama'

-.*(opt-|opt_|opt1|opt3|optfor|galactica|galpaca|pygmalion-350m):

- model_type: 'opt'

-.*(gpt-j|gptj|gpt4all-j|malion-6b|pygway|pygmalion-6b|dolly-v1):

- model_type: 'gptj'

-.*(gpt-neox|koalpaca-polyglot|polyglot.*koalpaca|polyglot-ko|polyglot_ko|pythia|stablelm|incite|dolly-v2|polycoder|h2ogpt-oig|h2ogpt-oasst1|h2ogpt-gm):

- model_type: 'gptneox'

-.*bloom:

- model_type: 'bloom'

-.*gpt2:

- model_type: 'gpt2'

-.*falcon:

- model_type: 'falcon'

-.*mpt:

- model_type: 'mpt'

-.*(starcoder|starchat):

- model_type: 'starcoder'

-.*dolly-v2:

- model_type: 'dollyv2'

-.*replit:

- model_type: 'replit'

-.*(oasst|openassistant-|stablelm-7b-sft-v7-epoch-3):

- instruction_template: 'Open Assistant'

- skip_special_tokens: false

-(?!.*galactica)(?!.*reward).*openassistant:

- instruction_template: 'Open Assistant'

- skip_special_tokens: false

-.*galactica:

- skip_special_tokens: false

-.*dolly-v[0-9]-[0-9]*b:

- instruction_template: 'Alpaca'

- skip_special_tokens: false

-.*alpaca-native-4bit:

- instruction_template: 'Alpaca'

- custom_stopping_strings: '"### End"'

-.*llava:

- instruction_template: 'LLaVA'

- custom_stopping_strings: '"\n###"'

-.*llava.*1.5:

- instruction_template: 'LLaVA-v1'

-.*wizard.*mega:

- instruction_template: 'Wizard-Mega'

- custom_stopping_strings: '""'

-.*starchat-beta:

- instruction_template: 'Starchat-Beta'

- custom_stopping_strings: '"<|end|>"'

-(?!.*v0)(?!.*1.1)(?!.*1_1)(?!.*stable)(?!.*chinese).*vicuna:

- instruction_template: 'Vicuna-v0'

-.*vicuna.*v0:

- instruction_template: 'Vicuna-v0'

-.*vicuna.*(1.1|1_1|1.3|1_3):

- instruction_template: 'Vicuna-v1.1'

-.*vicuna.*(1.5|1_5):

- instruction_template: 'Vicuna-v1.1'

-.*stable.*vicuna:

- instruction_template: 'StableVicuna'

-(?!.*chat).*chinese-vicuna:

- instruction_template: 'Alpaca'

-.*chinese-vicuna.*chat:

- instruction_template: 'Chinese-Vicuna-Chat'

-.*alpaca:

- instruction_template: 'Alpaca'

-.*koala:

- instruction_template: 'Koala'

-.*chatglm:

- instruction_template: 'ChatGLM'

-.*(metharme|pygmalion|mythalion):

- instruction_template: 'Metharme'

-.*raven:

- instruction_template: 'RWKV-Raven'

-.*moss-moon.*sft:

- instruction_template: 'MOSS'

-.*stablelm-tuned:

- instruction_template: 'StableLM'

-.*galactica.*finetuned:

- instruction_template: 'Galactica Finetuned'

-.*galactica.*-v2:

- instruction_template: 'Galactica v2'

-(?!.*finetuned)(?!.*-v2).*galactica:

- instruction_template: 'Galactica'

-.*guanaco:

- instruction_template: 'Guanaco non-chat'

-.*baize:

- instruction_template: 'Baize'

-.*mpt-.*instruct:

- instruction_template: 'Alpaca'

-.*mpt-.*chat:

- instruction_template: 'ChatML'

-(?!.*-flan-)(?!.*-t5-).*lamini-:

- instruction_template: 'Alpaca'

-.*incite.*chat:

- instruction_template: 'INCITE-Chat'

-.*incite.*instruct:

- instruction_template: 'INCITE-Instruct'

-.*ziya-:

- instruction_template: 'Ziya'

-.*koalpaca:

- instruction_template: 'KoAlpaca'

-.*openbuddy:

- instruction_template: 'OpenBuddy'

-(?!.*chat).*vigogne:

- instruction_template: 'Vigogne-Instruct'

-.*vigogne.*chat:

- instruction_template: 'Vigogne-Chat'

-.*(llama-deus|supercot|llama-natural-instructions|open-llama-0.3t-7b-instruct-dolly-hhrlhf|open-llama-0.3t-7b-open-instruct):

- instruction_template: 'Alpaca'

-.*bactrian:

- instruction_template: 'Bactrian'

-.*(h2ogpt-oig-|h2ogpt-oasst1-|h2ogpt-research-oasst1-):

- instruction_template: 'H2O-human_bot'

-.*h2ogpt-gm-:

- instruction_template: 'H2O-prompt_answer'

-.*manticore:

- instruction_template: 'Manticore Chat'

-.*bluemoonrp-(30|13)b:

- instruction_template: 'Bluemoon'

-.*Nous-Hermes-13b:

- instruction_template: 'Alpaca'

-.*airoboros:

- instruction_template: 'Vicuna-v1.1'

-.*airoboros.*1.2:

- instruction_template: 'Airoboros-v1.2'

-.*alpa(cino|sta):

- instruction_template: 'Alpaca'

-.*hippogriff:

- instruction_template: 'Hippogriff'

-.*lazarus:

- instruction_template: 'Alpaca'

-.*guanaco-.*(7|13|33|65)b:

- instruction_template: 'Guanaco'

-.*hypermantis:

- instruction_template: 'Alpaca'

-.*open-llama-.*-open-instruct:

- instruction_template: 'Alpaca'

-.*starcoder-gpteacher-code-instruct:

- instruction_template: 'Alpaca'

-.*tulu:

- instruction_template: 'Tulu'

-.*chronos:

- instruction_template: 'Alpaca'

-.*samantha:

- instruction_template: 'Samantha'

-.*wizardcoder:

- instruction_template: 'Alpaca'

-.*minotaur:

- instruction_template: 'Minotaur'

-.*orca_mini:

- instruction_template: 'Orca Mini'

-.*(platypus|gplatty|superplatty):

- instruction_template: 'Alpaca'

-.*(openorca-platypus2):

- instruction_template: 'OpenOrca-Platypus2'

- custom_stopping_strings: '"### Instruction:", "### Response:"'

-.*longchat:

- instruction_template: 'Vicuna-v1.1'

-.*vicuna-33b:

- instruction_template: 'Vicuna-v1.1'

-.*redmond-hermes-coder:

- instruction_template: 'Alpaca'

-.*wizardcoder-15b:

- instruction_template: 'Alpaca'

-.*wizardlm:

- instruction_template: 'Vicuna-v1.1'

-.*godzilla:

- instruction_template: 'Alpaca'

-.*llama(-?)(2|v2).*chat:

- instruction_template: 'Llama-v2'

-.*newhope:

- instruction_template: 'NewHope'

-.*stablebeluga2:

- instruction_template: 'StableBeluga2'

-.*openchat:

- instruction_template: 'OpenChat'

-.*codellama.*instruct:

- instruction_template: 'Llama-v2'

-.*mistral.*instruct:

- instruction_template: 'Mistral'

-.*mistral.*openorca:

- instruction_template: 'ChatML'

-.*(WizardCoder-Python-34B-V1.0|Phind-CodeLlama-34B-v2|CodeBooga-34B-v0.1):

- instruction_template: 'Alpaca'
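The deleted `config.yaml` maps regular expressions over model names to settings. The sketch below shows one plausible way such a file is consumed. The lookup logic is an assumption rather than something this diff shows: matching is case-insensitive, and later entries override earlier ones. The function name `settings_for` is hypothetical, and the four patterns are copied from the hunk above.

```python
import re

# A few entries copied from the config.yaml hunk; dict order mirrors file order.
MODEL_CONFIG = {
    ".*airoboros": {"instruction_template": "Vicuna-v1.1"},
    ".*airoboros.*1.2": {"instruction_template": "Airoboros-v1.2"},
    ".*mistral.*instruct": {"instruction_template": "Mistral"},
    ".*mistral.*openorca": {"instruction_template": "ChatML"},
}


def settings_for(model_name):
    """Merge the settings of every pattern that matches the model name.

    Assumed semantics: case-insensitive match anchored at the start of the
    name, with later entries overriding earlier ones.
    """
    settings = {}
    for pattern, values in MODEL_CONFIG.items():
        if re.match(pattern.lower(), model_name.lower()):
            settings.update(values)
    return settings


print(settings_for("TheBloke_Mistral-7B-OpenOrca-GGUF"))
print(settings_for("airoboros-13b-gpt4-1.2"))
```

Under these assumed semantics, the order of entries matters: the generic `.*airoboros` rule applies first and the more specific `.*airoboros.*1.2` rule then overrides it.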

diff --git a/swarms/modelui/modules/ui_model_menu.py b/swarms/modelui/modules/ui_model_menu.py

index f78bc603..2b38dafd 100644

--- a/swarms/modelui/modules/ui_model_menu.py

+++ b/swarms/modelui/modules/ui_model_menu.py

@@ -235,7 +235,7 @@ def load_lora_wrapper(selected_loras):

 def download_model_wrapper(repo_id, specific_file, progress=gr.Progress(), return_links=False, check=False):
     try:
         progress(0.0)
-        downloader = importlib.import_module("download-model").ModelDownloader()
+        downloader = importlib.import_module("swarms.modelui.download_model").ModelDownloader()
         model, branch = downloader.sanitize_model_and_branch_names(repo_id, None)
         yield ("Getting the download links from Hugging Face")
         links, sha256, is_lora, is_llamacpp = downloader.get_download_links_from_huggingface(model, branch, text_only=False, specific_file=specific_file)
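The hunk above replaces a top-level module name containing a hyphen (`download-model`, which a plain `import` statement cannot name) with a dotted package path. The sketch below shows the dotted form of `importlib.import_module` under stated assumptions: the package `demo_pkg.modelui` and its `ModelDownloader` class are throwaway stand-ins created in a temporary directory, not the real `swarms` code.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Build a throwaway package tree: demo_pkg/modelui/download_model.py
# (hypothetical names standing in for swarms/modelui/download_model.py).
root = Path(tempfile.mkdtemp())
pkg = root / "demo_pkg" / "modelui"
pkg.mkdir(parents=True)
(root / "demo_pkg" / "__init__.py").write_text("")
(pkg / "__init__.py").write_text("")
(pkg / "download_model.py").write_text(
    "class ModelDownloader:\n"
    "    def name(self):\n"
    "        return 'downloader'\n"
)

# Make the new tree importable, then resolve the module by its dotted path.
sys.path.insert(0, str(root))
importlib.invalidate_caches()
module = importlib.import_module("demo_pkg.modelui.download_model")
downloader = module.ModelDownloader()
print(downloader.name())
```

A dotted path resolves through the package hierarchy, so it works from any working directory as long as the top-level package is importable. A bare top-level name is only found if the file's own directory is on `sys.path`.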
