
Swarms is a modular framework that enables reliable and useful multi-agent collaboration at scale to automate real-world tasks.


Purpose

At Swarms, we're transforming the landscape of AI from siloed AI agents to a unified 'swarm' of intelligence. Through relentless iteration and the power of collective insight from our 1500+ Agora researchers, we're developing a groundbreaking framework for AI collaboration. Our mission is to catalyze a paradigm shift, advancing Humanity with the power of unified autonomous AI agent swarms.

🤝 Schedule a 1-on-1 Session

Book a 1-on-1 session with Kye, the creator of Swarms, to discuss any issues, provide feedback, or explore how we can improve Swarms for you.

Hiring

We're hiring engineers, researchers, interns, and sales professionals to work on democratizing swarms. Email me your story at kye@apac.ai.

Installation

```bash
pip3 install swarms
```

Usage

Below is a small gallery of examples to run. For more, check out the docs to build your own agents and swarms!

MultiAgentDebate

`MultiAgentDebate` is a simple class that enables multi-agent collaboration.

```python
from swarms.workers import Worker
from swarms.swarms import MultiAgentDebate, select_speaker
from swarms.models import OpenAIChat

llm = OpenAIChat(
    model_name="gpt-4",
    openai_api_key="api-key",
    temperature=0.5,
)

node = Worker(
    llm=llm,
    ai_name="Optimus Prime",
    ai_role="Worker in a swarm",
    external_tools=None,
    human_in_the_loop=False,
    temperature=0.5,
)

node2 = Worker(
    llm=llm,
    ai_name="Bumble Bee",
    ai_role="Worker in a swarm",
    external_tools=None,
    human_in_the_loop=False,
    temperature=0.5,
)

node3 = Worker(
    llm=llm,
    ai_name="Bumble Bee",
    ai_role="Worker in a swarm",
    external_tools=None,
    human_in_the_loop=False,
    temperature=0.5,
)

agents = [node, node2, node3]

# Initialize multi-agent debate with the selection function
debate = MultiAgentDebate(agents, select_speaker)

# Run task
task = "What were the winning boston marathon times for the past 5 years (ending in 2022)? Generate a table of the year, name, country of origin, and times."
results = debate.run(task, max_iters=4)

# Print results
for result in results:
    print(f"Agent {result['agent']} responded: {result['response']}")
```
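To make the idea of a debate driven by a speaker-selection function more concrete, here is a minimal, self-contained sketch. It is not the `MultiAgentDebate` implementation: `StubAgent`, `round_robin_speaker`, and `run_debate` are hypothetical names, and the stub agents stand in for LLM-backed `Worker`s so the loop runs offline. The result dictionaries reuse the `agent`/`response` keys that the example above prints.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class StubAgent:
    """Hypothetical stand-in for an LLM-backed Worker."""
    name: str
    seen: List[str] = field(default_factory=list)

    def run(self, prompt: str) -> str:
        # A real agent would call an LLM here; the stub records and echoes.
        self.seen.append(prompt)
        return f"{self.name} considered: {prompt[:40]}"


def round_robin_speaker(step: int, agents: List[StubAgent]) -> int:
    """Hypothetical selection function: agents take turns in order."""
    return step % len(agents)


def run_debate(
    agents: List[StubAgent],
    select_speaker: Callable[[int, List[StubAgent]], int],
    task: str,
    max_iters: int = 4,
) -> List[Dict[str, str]]:
    results: List[Dict[str, str]] = []
    transcript = task
    for step in range(max_iters):
        speaker = agents[select_speaker(step, agents)]
        response = speaker.run(transcript)
        results.append({"agent": speaker.name, "response": response})
        # Each later speaker sees the task plus every earlier turn.
        transcript += f"\n{speaker.name}: {response}"
    return results


if __name__ == "__main__":
    debaters = [StubAgent("Optimus Prime"), StubAgent("Bumble Bee")]
    for result in run_debate(debaters, round_robin_speaker, "Summarize the task.", max_iters=3):
        print(f"Agent {result['agent']} responded: {result['response']}")
```

Swapping `round_robin_speaker` for a different function (for example, one that picks the agent that has spoken least) changes the debate order without touching the loop, which is the point of passing the selector in as a parameter.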

Worker

- The `Worker` is a fully featured agent with an LLM, tools, and a vector store for long-term memory!
- Place your API key as a parameter in the LLM if you choose.
- Then place the OpenAI API key in the `Worker` for the OpenAI embedding model.

```python
from swarms.models import OpenAIChat
from swarms.workers import Worker

llm = OpenAIChat(
    model_name="gpt-4",
    openai_api_key="api-key",
    temperature=0.5,
)

node = Worker(
    llm=llm,
    ai_name="Optimus Prime",
    # OpenAI key for the embeddings
    openai_api_key="sk-eee",
    ai_role="Worker in a swarm",
    external_tools=None,
    human_in_the_loop=False,
    temperature=0.5,
)

task = "What were the winning boston marathon times for the past 5 years (ending in 2022)? Generate a table of the year, name, country of origin, and times."
response = node.run(task)
print(response)
```
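The `Worker` description mentions a vector store for long-term memory, backed by an OpenAI embedding model. The sketch below illustrates only the retrieval idea: it replaces the embedding model with a toy bag-of-words vector and cosine similarity so it runs offline. `ToyMemory`, `embed`, and `recall` are hypothetical names, not part of Swarms.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyMemory:
    """Hypothetical long-term memory: stores texts alongside their vectors."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = ToyMemory()
memory.add("The Boston Marathon is held every April.")
memory.add("Swarms agents can use external tools.")
print(memory.recall("When is the marathon in Boston?"))
# -> ['The Boston Marathon is held every April.']
```

A production vector store would use dense embeddings and an approximate nearest-neighbour index instead of a linear scan, but the store-then-rank-by-similarity flow is the same.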

OmniModalAgent

- `OmniModalAgent` is an LLM agent with access to 10+ multi-modal encoders and diffusers! It can generate images, videos, speech, music, and much more. Get started with:

```python
from swarms.models import OpenAIChat
from swarms.agents import OmniModalAgent

llm = OpenAIChat(model_name="gpt-4")

agent = OmniModalAgent(llm)

agent.run("Create a video of a swarm of fish")
```

- `OmniModalAgent` has a UI, `omni_ui.py`, in the repository root. Launch it with:

```bash
python3 omni_ui.py
```
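`OmniModalAgent` lets an LLM drive multi-modal encoders and diffusers as tools. A minimal sketch of that tool-dispatch pattern follows, assuming a hypothetical registry keyed by tool name and a hard-coded tool choice in place of the LLM's decision. None of these names (`TOOLS`, `tool`, `dispatch`, `text_to_image`, `text_to_video`) come from Swarms, and the "models" only return placeholder strings.

```python
from typing import Callable, Dict

# Hypothetical tool registry: tool name -> function that "generates" media.
ToolFn = Callable[[str], str]
TOOLS: Dict[str, ToolFn] = {}


def tool(name: str) -> Callable[[ToolFn], ToolFn]:
    """Decorator that registers a function under a tool name."""
    def register(fn: ToolFn) -> ToolFn:
        TOOLS[name] = fn
        return fn
    return register


@tool("text_to_image")
def text_to_image(prompt: str) -> str:
    return f"image.png <- {prompt}"  # placeholder for a diffusion model call


@tool("text_to_video")
def text_to_video(prompt: str) -> str:
    return f"video.mp4 <- {prompt}"  # placeholder for a video model call


def dispatch(tool_name: str, prompt: str) -> str:
    """Run the tool the LLM chose; fail loudly on unknown names."""
    if tool_name not in TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    return TOOLS[tool_name](prompt)


# In a real agent the LLM would pick the tool name; here it is hard-coded.
print(dispatch("text_to_video", "a swarm of fish"))
```

Keeping tools behind a name-keyed registry means new encoders or diffusers can be added without changing the dispatch logic the LLM relies on.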

Documentation

For documentation, go to swarms.apac.ai.

NOTE: We need help building the documentation.

Docker Setup

The Dockerfiles are located in the Docker folder inside the infra folder (`infra/Docker`); navigate there in your environment.

Build the Docker image

You can build the Docker image using the provided Dockerfiles. Navigate to the `infra/Docker` directory where the Dockerfiles are located.

For the CPU version, use:

```bash
docker build -t swarms-api:latest -f Dockerfile.cpu .
```

For the GPU version, use:

```bash
docker build -t swarms-api:gpu -f Dockerfile.gpu .
```

Run the Docker container

After building the Docker image, you can run the Swarms API in a Docker container. Replace `your_redis_host` and `your_redis_port` with your actual Redis host and port.

For the CPU version:

```bash
docker run -p 8000:8000 -e REDIS_HOST=your_redis_host -e REDIS_PORT=your_redis_port swarms-api:latest
```

For the GPU version:

```bash
docker run --gpus all -p 8000:8000 -e REDIS_HOST=your_redis_host -e REDIS_PORT=your_redis_port swarms-api:gpu
```
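The `docker run` commands pass the Redis location to the container through the `REDIS_HOST` and `REDIS_PORT` environment variables. As a hedged illustration of how a Python service could read them, here is a small sketch; the `localhost`/`6379` defaults and the `RedisSettings`/`load_redis_settings` names are assumptions, not taken from the Swarms API code.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class RedisSettings:
    host: str
    port: int


def load_redis_settings(env: Optional[Mapping[str, str]] = None) -> RedisSettings:
    """Read REDIS_HOST/REDIS_PORT, falling back to assumed local defaults."""
    env = os.environ if env is None else env
    host = env.get("REDIS_HOST", "localhost")  # assumed default
    raw_port = env.get("REDIS_PORT", "6379")   # assumed default (Redis's usual port)
    try:
        port = int(raw_port)
    except ValueError:
        raise ValueError(f"REDIS_PORT must be an integer, got {raw_port!r}") from None
    return RedisSettings(host=host, port=port)


print(load_redis_settings())
```

Validating the port up front makes a typo in `-e REDIS_PORT=...` fail at startup with a clear message instead of surfacing later as a connection error.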

Access the Swarms API

The Swarms API will be accessible at http://localhost:8000. You can use tools like curl or Postman to send requests to the API.

Here's an example curl command that sends a POST request to the `/chat` endpoint:

```bash
curl -X POST -H "Content-Type: application/json" -d '{"api_key": "your_openai_api_key", "objective": "your_objective"}' http://localhost:8000/chat
```

Replace `your_openai_api_key` and `your_objective` with your actual OpenAI API key and objective.
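The same request can be sent from Python using only the standard library. This sketch mirrors the curl command: the `/chat` path, port 8000, the JSON content type, and the `api_key`/`objective` fields all come from it, while `build_chat_request` and `send_chat` are hypothetical helpers. The response format is not documented here, so the sketch simply returns the raw body as text.

```python
import json
import urllib.request


def build_chat_request(api_key: str, objective: str,
                       base_url: str = "http://localhost:8000") -> urllib.request.Request:
    """Build a POST /chat request with the same JSON body as the curl example."""
    body = json.dumps({"api_key": api_key, "objective": objective}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(api_key: str, objective: str, timeout: float = 60.0) -> str:
    """Send the request to the running container and return the response body as text."""
    request = build_chat_request(api_key, objective)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the container running, `print(send_chat("your_openai_api_key", "your_objective"))` sends the same request as the curl example. Separating request construction from sending keeps the payload easy to inspect without a live server.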

✨ Features

- Easy-to-use base LLMs: OpenAI, PaLM, Anthropic, HuggingFace

- Enterprise-grade and production-ready, with robust error handling

- Native multi-modality, with multi-modal LLMs as tools

- Infinite Memory Processing: Store infinite sequences of multi-modal data such as text, images, videos, and audio

- Usability: Extreme emphasis on usability; the code is kept at its theoretical minimum of complexity

- Reliability: Outputs that accomplish the tasks and activities you wish to execute

- Fluidity: A seamless all-around experience for building production-grade workflows

- Speed: Lower the time to automate tasks by 90%

- Simplicity: Swarms is extremely simple to use, if not the simplest agent framework of all time

- Powerful: Swarms is capable of everything from building entire software apps to large-scale data analysis and handling chaotic situations

Contribute

We're always looking for contributors to help us improve and expand this project. If you're interested, please check out our Contributing Guidelines.

Thank you for being a part of our project!

Roadmap

Please check out our Roadmap and consider contributing to make the dream of Swarms real and advance Humanity.

Optimization Priorities

- Reliability: Increase the reliability of the swarm, obtaining the desired output from a basic, undetailed input.

- Speed: Reduce the time it takes for the swarm to accomplish tasks by improving the communication layer, critiquing, and self-alignment with meta prompting.

- Scalability: Ensure that the system is asynchronous, concurrent, and self-healing to support scalability.

Our goal is to continuously improve Swarms by following this roadmap, while also being adaptable to new needs and opportunities as they arise.
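The Scalability priority calls for a system that is asynchronous, concurrent, and self-healing. One common way to approach this in Python, sketched here as an assumption rather than as Swarms' actual design, is to run agent tasks concurrently with `asyncio.gather` and let each task recover from transient failures by retrying with exponential backoff. `run_with_retries`, `make_flaky_agent`, and `run_swarm` are hypothetical names.

```python
import asyncio
from typing import Awaitable, Callable, List

AgentFn = Callable[[], Awaitable[str]]


async def run_with_retries(agent: AgentFn, retries: int = 3, base_delay: float = 0.01) -> str:
    """Await an agent, retrying with exponential backoff if it raises."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    for attempt in range(retries):
        try:
            return await agent()
        except Exception:
            if attempt == retries - 1:
                raise  # out of retries: surface the last error
            await asyncio.sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


def make_flaky_agent(name: str, failures: int) -> AgentFn:
    """Hypothetical agent that fails `failures` times, then succeeds."""
    calls = 0

    async def agent() -> str:
        nonlocal calls
        calls += 1
        if calls <= failures:
            raise ConnectionError(f"{name}: transient failure")
        return f"{name} done"

    return agent


async def run_swarm(agents: List[AgentFn]) -> List[str]:
    # Agents run concurrently; each one retries independently of the others.
    return list(await asyncio.gather(*(run_with_retries(a) for a in agents)))


results = asyncio.run(run_swarm([make_flaky_agent("A", 0), make_flaky_agent("B", 2)]))
print(results)  # ['A done', 'B done']
```

Because each agent retries inside its own coroutine, one slow or flaky agent delays only its own result, and `asyncio.gather` still returns results in the order the agents were passed in.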

Bounty Program

Our bounty program is an exciting opportunity for contributors to help us build the future of Swarms. By participating, you can earn rewards while contributing to a project that aims to revolutionize digital activity.

Here's how it works:

1. Check out our Roadmap: We've shared our roadmap detailing our short and long-term goals. These are the areas where we're seeking contributions.

2. Pick a Task: Choose a task from the roadmap that aligns with your skills and interests. If you're unsure, you can reach out to our team for guidance.

3. Get to Work: Once you've chosen a task, start working on it. Remember, quality is key. We're looking for contributions that truly make a difference.

4. Submit your Contribution: Once your work is complete, submit it for review. We'll evaluate your contribution based on its quality, relevance, and the value it brings to Swarms.

5. Earn Rewards: If your contribution is approved, you'll earn a bounty. The amount of the bounty depends on the complexity of the task, the quality of your work, and the value it brings to Swarms.

The Plan

Phase 1: Building the Foundation

In the first phase, our focus is on building the basic infrastructure of Swarms. This includes developing key components like the Swarms class, integrating essential tools, and establishing task completion and evaluation logic. We'll also start developing our testing and evaluation framework during this phase. If you're interested in foundational work and have a knack for building robust, scalable systems, this phase is for you.

Phase 2: Optimizing the System

In the second phase, we'll focus on optimizing Swarms by integrating more advanced features, improving the system's efficiency, and refining our testing and evaluation framework. This phase involves more complex tasks, so if you enjoy tackling challenging problems and contributing to the development of innovative features, this is the phase for you.

Phase 3: Towards Super-Intelligence

The third phase of our bounty program is the most exciting: this is where we aim to achieve super-intelligence. In this phase, we'll be working on improving the swarm's capabilities, expanding its skills, and fine-tuning the system based on real-world testing and feedback. If you're excited about the future of AI and want to contribute to a project that could potentially transform the digital world, this is the phase for you.

Remember, our roadmap is a guide, and we encourage you to bring your own ideas and creativity to the table. We believe that every contribution, no matter how small, can make a difference. So join us on this exciting journey and help us create the future of Swarms.

Ecosystem

- Make a swarm that checks arXiv for papers -> checks if there is a GitHub link -> then implements them and checks them

- SwarmLogic, where a swarm is your API, database, and backend!

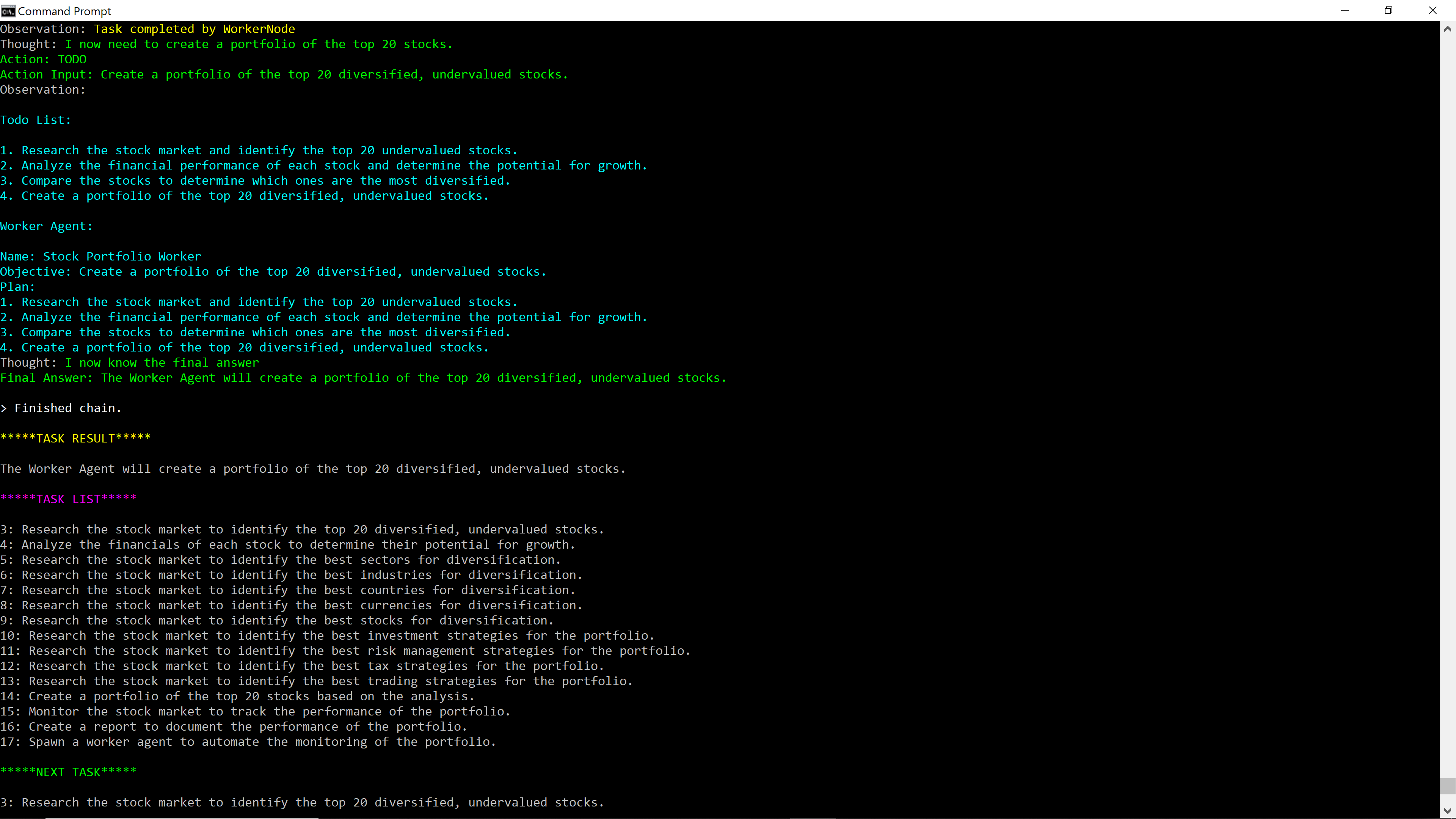

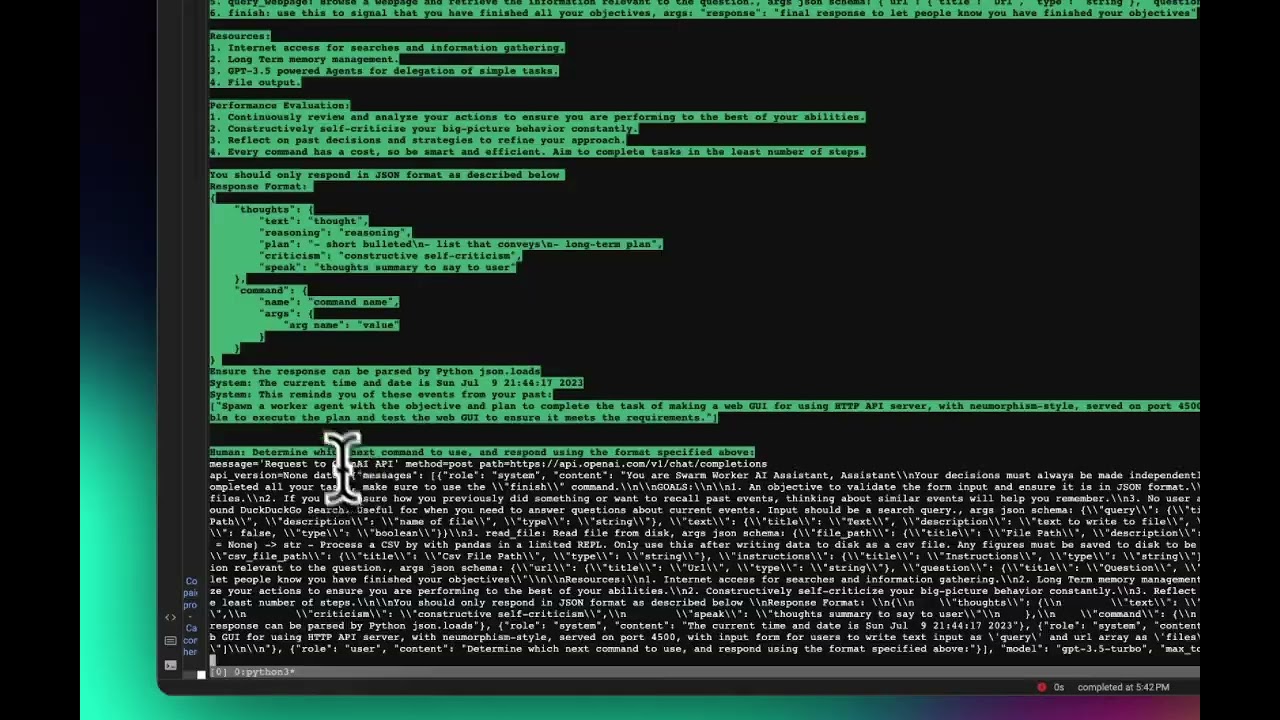
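The first ecosystem idea chains three steps: find papers on arXiv, check whether each has a GitHub link, then implement and test them. As a hedged sketch of only the middle step, here is a function that extracts GitHub repository references from a paper's abstract text with a regular expression. The pattern, the `find_github_repos` name, and the `example-lab/fast-attn` URL are all made up for illustration; fetching papers from arXiv is left out.

```python
import re
from typing import List

# Matches https://github.com/<owner>/<repo> (case-insensitive host).
GITHUB_REPO = re.compile(r"https?://(?:www\.)?github\.com/([\w.-]+)/([\w.-]+)", re.IGNORECASE)


def find_github_repos(text: str) -> List[str]:
    """Return unique 'owner/repo' strings in order of first appearance."""
    repos: List[str] = []
    for owner, repo in GITHUB_REPO.findall(text):
        repo = repo.rstrip(".")  # drop a sentence-ending period
        if repo.endswith(".git"):
            repo = repo[: -len(".git")]  # treat clone URLs as the same repo
        slug = f"{owner}/{repo}"
        if slug not in repos:
            repos.append(slug)
    return repos


abstract = (
    "We release code at https://github.com/example-lab/fast-attn. "
    "See also https://github.com/example-lab/fast-attn.git for mirrors."
)
print(find_github_repos(abstract))  # ['example-lab/fast-attn']
```

Normalizing trailing periods and `.git` suffixes keeps the swarm from cloning and implementing the same repository twice.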

Demos

Swarm Video Demo

Contact

For enterprise and production-ready deployments, book a call with us here so we can learn more about you and your story.